Compiling The Machinery with Emscripten: Part 2 — Graphics

This blog series is about getting The Machinery to run in the web browser using Emscripten. I spent two Hack Days working on this.

On the first day, I got some basic command-line programs to run and print their output in a browser window.

In this part, I’ll make it more interesting by adding graphics and interactivity:

2D graphics and UI.

Web browsers use WebGL for 3D drawing — a variant of OpenGL. This creates some problems because The Machinery does not currently have an OpenGL backend. Instead, we use Vulkan, and we make heavy use of advanced Vulkan features that can’t be easily emulated in OpenGL.

While it would be possible to add a new OpenGL-based rendering backend to The Machinery, it’s a pretty big task, well beyond the scope of what can be achieved in a single Hack Day. The Machinery is also geared towards “modern” rendering backends (Vulkan, DX12, Metal). Adding support for a “legacy” backend such as OpenGL would mean even more work.

So how can we get some graphics in the web browser?

Well if we don’t need to render everything, we don’t necessarily need a complete render backend. For my Hack Day project, I decided to just focus on getting 2D drawing to work. Since 2D drawing also powers the UI, if I could just get 2D drawing to work I should in theory be able to run any 2D/UI program built using The Machinery in the browser.

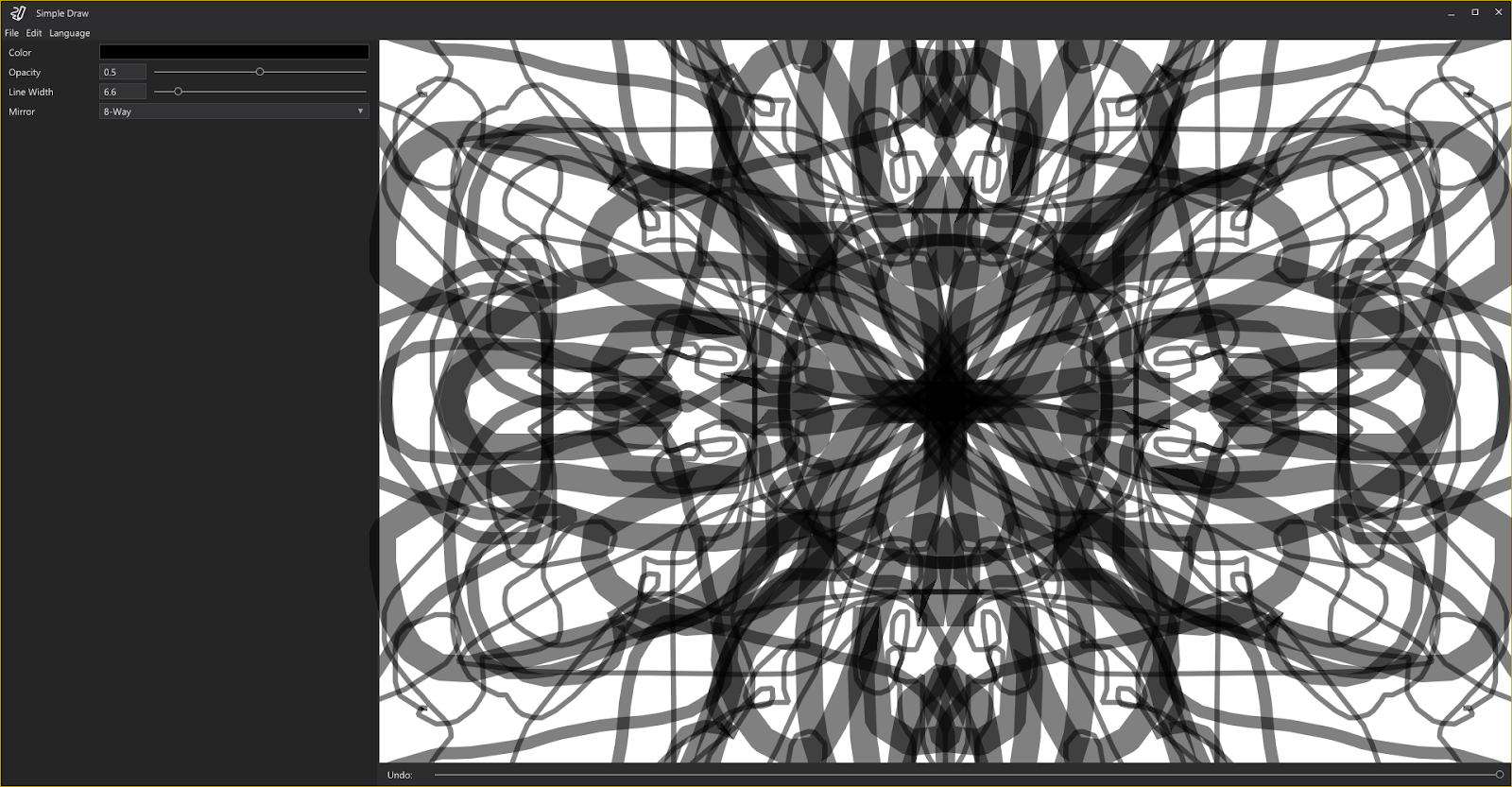

I like to have a concrete and focused goal to work against, so I decided to try to make Simple Draw work in the browser. Simple Draw is a simple 2D drawing program built in The Machinery. We ship it as a sample to show how The Machinery can be used as a framework to build all kinds of tools and applications (not just for game development):

Simple Draw.

There are many possible ways of implementing 2D drawing. For example, we could take all the 2D drawing commands that are exposed in tm_draw2d_api such as tm_draw2d_api->fill_rect() and implement them using OpenGL. But let’s try to do something a little bit more clever that takes advantage of how 2D drawing works in The Machinery.

The blog post One Draw Call UI explains this in depth, but basically, everything that is drawn in The Machinery’s UI is gathered up into a single pair of vertex/index buffers and then drawn using a single draw call. (Actually, the vertex buffer is more like a “primitive buffer” — it doesn’t store vertices per se, it stores a representation of the primitives we want to draw that is unpacked by the vertex shader.)

For our purposes, this is kind of great, because it means we don’t have to figure out how to draw lines, rectangles, curves, etc using OpenGL. All we need to do is to figure out how to draw the vertex/index buffer pair. Unfortunately, we can’t just send them to OpenGL, because they use a bunch of Vulkan techniques that don’t work in OpenGL (e.g., non-uniform buffers and dependent reads). But it should be possible to fix that with a conversion step — code on the CPU that converts the Vulkan buffers to something that OpenGL can draw.

So here’s our plan:

- Draw the UI using normal tm_ui_api and tm_draw2d_api calls.

- Get the vertex and index buffers from the UI.

- Convert them into OpenGL-friendly buffers.

- Render them using OpenGL.

But before we get started on that, let’s make sure that we’re able to render anything.

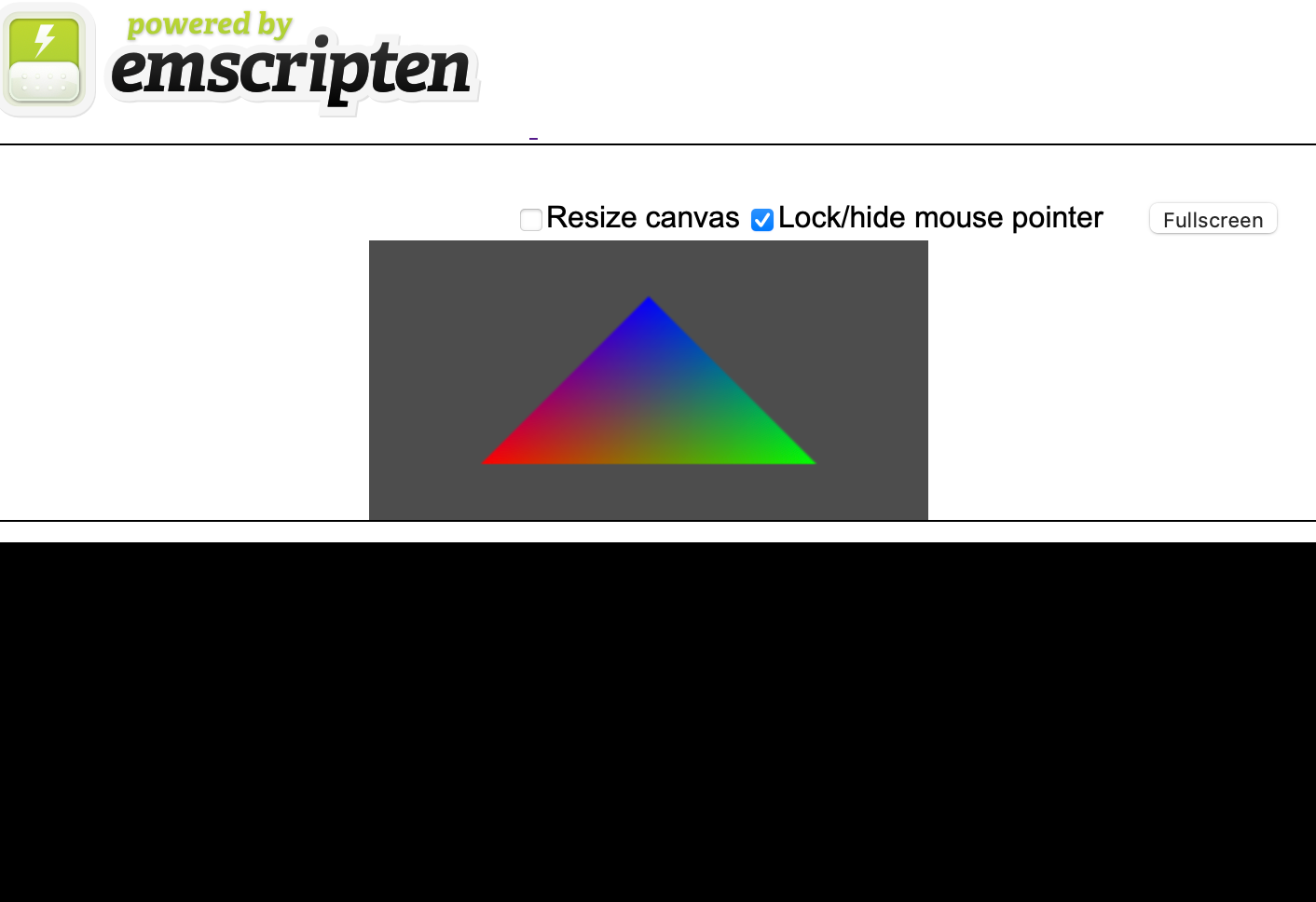

First Triangle

Whenever I’m working on graphics (which isn’t too often), I always start by downloading some sample code to get the first triangle up. It’s always tricky to know exactly what you need to do to get to that point — do you need to set up surfaces, cameras, viewports, etc — so it’s a lot easier to get started by iterating over something that’s already working. (That’s true for The Machinery too — if you want to make something with The Machinery, do yourself a favor and start with a sample instead of building from scratch.)

After a bit more Googling than I expected, I settled on webgl_draw_triangle.c

Here’s what it looked like when I compiled it and ran it in the browser:

OpenGL triangle from Emscripten.

All good, we have something drawing. Now let’s try to draw something using The Machinery.

Static Linking

The command-line programs from the first blog post only needed the foundation library to run. For our UI programs, we also need the ui library which has the tm_draw2d_api and tm_ui_api APIs.

This presents a bit of a problem because, in our regular build setup, the ui library is dynamically linked and loaded as a plugin, but our web application is a static executable. (foundation doesn’t have this issue, because it’s always linked statically.)

It doesn’t seem to be that widely used, but Emscripten actually supports dynamic linking through dlopen(), so we have two choices:

- Figure out how to get dlopen() and dynamic linking to work in Emscripten so that we can link the ui plugin dynamically.

- Change the build configuration so that ui is linked statically into the web application.

Since I couldn’t find a lot of tutorials or documentation about dynamic linking in Emscripten, I decided to go with the second option. (This was perhaps a mistake, I’ll get back to this later.)

Changing the linking options for the UI library is pretty straightforward, we can just tell Premake to use static linking when we compile for the web target:

    filter { "platforms:web" }
        kind "StaticLib"
    filter { "not platforms:web" }
        kind "SharedLib"

The problem is that when we change the linking like this, we get a bunch of compile errors.

Dynamically linked libraries have their own “namespaces” for exported symbols. I.e., you can have two dynamically linked libraries loaded that both have the symbol foobar() without running into any trouble. You can retrieve the address of the exported foobar() symbol in a particular library by calling dlsym(lib, "foobar") (or GetProcAddress(module, "foobar") on Windows).

In contrast, when you link statically, all global symbols end up in the same namespace. If you try to statically link two libraries that both define foobar() you will get a linker error about trying to define the symbol twice.

In some applications, this might not be a big deal, because there is only a small chance that you would use the same global symbol in two different libraries. Unfortunately for our purposes today, the coding conventions we use in The Machinery actually encourage the reuse of symbol names.

For example, all plugins in The Machinery use an exported function called tm_load_plugin() as their entry point (this is the function called by the plugin system to initialize the plugin). This works great with dynamic linking, but when linking statically, all those function names will collide.

Another common pattern is to store API pointers in global variables:

    struct tm_draw2d_api *tm_draw2d_api;
    tm_draw2d_api = tm_get_api(reg, tm_draw2d_api);

This pattern will also cause name collisions when linking statically.

Maybe it would be a good idea to make our code a bit more statically-linking friendly so that users who wanted to build statically linked binaries could more easily do so.
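One way such static-linking friendliness could look is to give each plugin's entry point a unique name when building statically, and have the host call the entry points from a table instead of looking them up with dlsym(). The sketch below is purely illustrative: the PLUGIN_ENTRY macro, the EXAMPLE_STATIC_LINK flag, and the simplified entry point signature are all assumptions, not part of The Machinery.

```c
#include <assert.h>
#include <stddef.h>

// Hypothetical build flag, set by the build system for static targets.
#define EXAMPLE_STATIC_LINK 1

// Hypothetical macro: a unique symbol per plugin when linking statically,
// the shared tm_load_plugin name when each plugin is its own library.
#if EXAMPLE_STATIC_LINK
#define PLUGIN_ENTRY(name) tm_load_plugin_##name
#else
#define PLUGIN_ENTRY(name) tm_load_plugin
#endif

// Simplified stand-in signature; the real entry point takes other arguments.
typedef void (*plugin_entry_f)(int *load_count);

// Each of these would normally live in its own plugin library.
void PLUGIN_ENTRY(ui)(int *load_count)
{
    assert(load_count);
    ++*load_count;
}

void PLUGIN_ENTRY(draw2d)(int *load_count)
{
    assert(load_count);
    ++*load_count;
}

// Without dlsym(), the host lists the statically linked entry points itself.
static const plugin_entry_f static_plugins[] = {
    PLUGIN_ENTRY(ui),
    PLUGIN_ENTRY(draw2d),
};

// Calls every registered entry point and returns how many plugins ran.
int load_static_plugins(void)
{
    int load_count = 0;
    const size_t n = sizeof(static_plugins) / sizeof(static_plugins[0]);
    for (size_t i = 0; i < n; ++i)
        static_plugins[i](&load_count);
    return load_count;
}
```

The same idea would have to be applied to the global API pointers, for example by making them static to each translation unit.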

But for now I just brute-force hacked around the problem (it’s called a Hack Day after all). I just sprinkled a bunch of #if defined(TM_OS_WEB) all over the code to get rid of all the duplicated symbols. I got everything compiling, but it’s not really a viable long-term solution. In retrospect, it might have been better to try to get dynamic linking to work, though it’s hard to know if there would have been any hidden dragons down that path.

Drawing the UI

Now that we have a triangle on the screen and access to the UI and Draw2D libraries, the next step is to get some drawing from The Machinery into the web browser. The functions in the tm_draw2d_api take an explicit vbuffer and ibuffer as arguments, so we can achieve this in four steps:

- Create a vbuffer and an ibuffer.

- Use tm_draw2d_api->fill_rect() etc. to draw something into the buffers.

- Convert the buffers to an OpenGL-friendly format.

- Draw the OpenGL buffers using glDrawArrays().

The trickiest part here is the conversion step, so let’s dig into this in a little more detail.

The UI vertex buffer in The Machinery uses a compressed vertex format (actually a compressed primitive format). For example, when we draw a rect, we don’t put each vertex of the rect in the buffer. Instead, we just put the rect coordinates into the buffer as four floats {x, y, w, h}. The indices in the index buffer all point to this same rect struct. In addition, some bits in the index are used to indicate what kind of primitive we are drawing (untextured rect) and others indicate which corner of the rect each index represents. The vertex shader will then use the corner bit flags together with the rect struct to compute the actual position of the vertex. This saves a lot of vertex buffer memory compared to using regular vertices. And on modern platforms, memory bandwidth rather than ALU performance is often the bottleneck.
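To make the corner trick concrete, here is a minimal sketch of how a corner position can be recovered from a rect and an index. The bit layout is an assumption made for illustration (two corner bits at bits 24 and 25, sitting between the primitive-type and offset masks used by the conversion code later in this post); the real encoding in tm_draw2d_api may differ, and the type names are stand-ins.

```c
#include <assert.h>
#include <stdint.h>

typedef struct { float x, y; } vec2_t;       // stand-in for tm_vec2_t
typedef struct { float x, y, w, h; } rect_t; // stand-in for tm_rect_t

// ASSUMED bit layout, for illustration only.
enum {
    CORNER_SHIFT = 24,   // corner flags live in bits 24..25
    CORNER_MASK = 0x3,   // two bits select one of four corners
    CORNER_RIGHT = 0x1,  // set: right edge, clear: left edge
    CORNER_BOTTOM = 0x2, // set: bottom edge, clear: top edge
};

// Mirrors what the vertex shader does: rect + corner bits -> vertex position.
vec2_t rect_corner(rect_t r, uint32_t index)
{
    assert(r.w >= 0.0f && r.h >= 0.0f);
    const uint32_t corner = (index >> CORNER_SHIFT) & CORNER_MASK;
    vec2_t p = { r.x, r.y };
    if (corner & CORNER_RIGHT)
        p.x += r.w;
    if (corner & CORNER_BOTTOM)
        p.y += r.h;
    return p;
}
```

With this layout, all six indices of a rect share the same offset bits and differ only in their corner bits, so the rect itself is stored once.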

Clipping is handled with a similar approach. If clipping is enabled for a rect, the rect’s struct in the vertex buffer will store a reference to the clipping rect. The reference, in this case, is actually an index to another location in the vertex buffer where we store the coordinates of the clipping rect. Note that this lets us reuse the clipping rect for multiple primitives. The vertex shader reads the clipping rect data using a dependent read and supplies it to the pixel shader.

The rect compression is not the only compression we have in the vertex buffer. We have similar approaches for other commonly drawn things. The text drawing is perhaps the most complicated one. In this case, an entire string of up to 32 characters is represented by a single entry in the vertex buffer.

To draw this using OpenGL, we loop over all the primitives (all the indices in the index buffer) and expand them from the compressed format to uniform “fat” vertices that we can then draw using a traditional glDrawArrays() call. The “fat” vertex format looks something like this:

    struct vertex {
        tm_vec2_t pos;
        tm_vec4_t col;
        tm_rect_t clip;
        tm_vec2_t uv;
    };

I.e., instead of being clever and referencing data in different locations, we just put all the crap we need into the vertex struct.

Our conversion function on the CPU will look pretty similar to the vertex shader function running on the GPU in the Vulkan version. It will compute the vertex position and the clip rect using the index data and dependent reads from the vertex buffer and use it to set up the fat vertex.

Note that this is pretty inefficient compared to what the Vulkan renderer does. Not only are we spending time on the conversion itself, but we also end up with a lot more data in the vertex buffer. But we’re not terribly concerned about performance right now, we just want to get it running.

The code for the conversion ends up looking something like this:

    static inline void add_rect(struct vertex **buffer,
        tm_rect_t r_in, tm_color_srgb_t c, tm_rect_t clip,
        tm_allocator_i *ta)
    {
        struct vertex *vs = *buffer;
        const tm_vec4_t col = { c.r / 255.0f, c.g / 255.0f,
            c.b / 255.0f, c.a / 255.0f };
        const tm_rect_t r = rect_to_open_gl(r_in);
        // Two triangles (six fat vertices) per rect.
        const struct vertex v[6] = {
            { tm_rect_bottom_left(r), col, clip },
            { tm_rect_top_left(r), col, clip },
            { tm_rect_top_right(r), col, clip },
            { tm_rect_top_right(r), col, clip },
            { tm_rect_bottom_right(r), col, clip },
            { tm_rect_bottom_left(r), col, clip },
        };
        tm_carray_push_array(vs, v, 6, ta);
        *buffer = vs;
    }

    uint32_t *i = ibuffer->ibuffer;
    uint32_t *end = i + ibuffer->in;
    while (i < end) {
        const uint32_t idx = *i;
        // The top bits hold the primitive type, the low bits the offset
        // (in 32-bit words) of the primitive's data in the vertex buffer.
        const uint32_t primitive = idx & 0xfc000000;
        const uint32_t word_offset = idx & 0x00ffffff;
        const uint32_t byte_offset = word_offset * 4;
        if (primitive == TM_DRAW2D_PRIMITIVE_RECT) {
            tm_draw2d_rect_vertex_t *r = (tm_draw2d_rect_vertex_t *)(vbuffer->vbuffer + byte_offset);
            const uint32_t clip = r->clip ? byte_offset - r->clip : 0;
            add_rect(buffer, r->rect, r->color, get_clip_rect(vbuffer, clip), a);
            i += 6;
        }
        // Other primitive types are handled similarly (omitted here).
    }

This example shows the code for an untextured RECT primitive. I added similar code for all the other primitive formats supported by tm_draw2d_api, verifying along the way that everything was drawing correctly.
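The rect_to_open_gl() helper used in the conversion code is not shown in this post. As a rough sketch of what such a conversion might do, the function below maps a rect in pixel coordinates to OpenGL's normalized device coordinates, where both axes run from -1 to 1. It assumes that UI coordinates have their origin in the top-left corner with y pointing down and that the converted rect is anchored at its bottom-left corner; the function name, the rect_t type, and the canvas size parameters are made up for this example.

```c
#include <assert.h>

typedef struct { float x, y, w, h; } rect_t; // stand-in for tm_rect_t

// Maps a pixel-space rect (ASSUMED: origin top-left, y down) to normalized
// device coordinates (origin in the center, y up, both axes in [-1, 1]).
// The result is anchored at the rect's bottom-left corner in GL space.
rect_t example_rect_to_gl(rect_t r, float canvas_w, float canvas_h)
{
    assert(canvas_w > 0.0f && canvas_h > 0.0f);
    const rect_t out = {
        .x = 2.0f * r.x / canvas_w - 1.0f,
        .y = 1.0f - 2.0f * (r.y + r.h) / canvas_h,
        .w = 2.0f * r.w / canvas_w,
        .h = 2.0f * r.h / canvas_h,
    };
    return out;
}
```

A rect covering the whole canvas maps to {-1, -1, 2, 2}, which is the full clip-space square.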

The GL shaders to draw the fat vertices are pretty straightforward:

    const char vertex_shader[] =
        "attribute vec4 apos;"
        "attribute vec4 acolor;"
        "attribute vec4 aclip;"
        "attribute vec2 auv;"
        "varying vec4 color;"
        "varying vec4 clip;"
        "varying vec2 texCoord;"
        "void main() {"
        "    color = acolor;"
        "    clip = aclip;"
        "    texCoord = auv;"
        "    gl_Position = apos;"
        "}";

    const char fragment_shader[] =
        "precision lowp float;"
        "varying vec4 color;"
        "varying vec4 clip;"
        "varying vec2 texCoord;"
        "uniform sampler2D texSampler;"
        "void main() {"
        "    if (gl_FragCoord.x < clip.x || gl_FragCoord.x > clip.x + clip.z"
        "        || gl_FragCoord.y < clip.y || gl_FragCoord.y > clip.y + clip.w)"
        "        discard;"
        "    if (texCoord.x != 0.0 || texCoord.y != 0.0) {"
        "        gl_FragColor = texture2D(texSampler, texCoord);"
        "        gl_FragColor.rgb = color.rgb;"
        "    } else"
        "        gl_FragColor = color;"
        "}";

Text Rendering

The most complicated part of rendering was to implement font rendering. First, because the text rendering uses the most complicated compression format in the primitive buffer. Second, because it needed texture support.

As I like to do, I approached this incrementally:

- Added support for rendering of textured rectangles. (Using an in-memory checkered texture that I generated with a couple of for-loops.)

- Changed the code to use a texture loaded from a file instead.

- Implemented text rendering using a simple texture where each ASCII letter was represented by a 16x16 pixel square. (You can find a bunch of such fonts with a quick Google search.) Our text rendering system requires a font texture together with a description of where in the texture each character can be found, but this is pretty easy to set up manually when the characters are all square.

- Implemented support for our regular font generation system that loads TTF files and renders them as bitmaps using [stb_truetype.h](https://github.com/nothings/stb/blob/master/stb_truetype.h).
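For the fixed-grid font in the third step, the "description of where each character can be found" boils down to a bit of arithmetic. The sketch below assumes a 256x256 pixel atlas with 16 glyphs per row, indexed by character code; that layout and all the names are assumptions for the example, not the format The Machinery uses.

```c
#include <assert.h>

typedef struct { float u0, v0, u1, v1; } glyph_uv_t;

// ASSUMED atlas layout: 16x16 cells of 16x16 pixels, indexed by char code.
enum { CELL_PX = 16, CELLS_PER_ROW = 16, ATLAS_PX = CELL_PX * CELLS_PER_ROW };

// Returns the normalized texture coordinates of the cell holding glyph `c`.
glyph_uv_t glyph_uv(int c)
{
    assert(c >= 0 && c < CELLS_PER_ROW * CELLS_PER_ROW);
    const int col = c % CELLS_PER_ROW;
    const int row = c / CELLS_PER_ROW;
    const float scale = 1.0f / (float)ATLAS_PX;
    const glyph_uv_t uv = {
        .u0 = (float)(col * CELL_PX) * scale,
        .v0 = (float)(row * CELL_PX) * scale,
        .u1 = (float)((col + 1) * CELL_PX) * scale,
        .v1 = (float)((row + 1) * CELL_PX) * scale,
    };
    return uv;
}
```

For example, 'A' (code 65) lands in column 1, row 4, so its cell spans u from 0.0625 to 0.125 and v from 0.25 to 0.3125.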

The trickiest part of this was actually the decoding of the compressed format. I won’t go into more detail on that since it depends a lot on the specific way we encode our fonts.

In addition, I also needed to add support for loading files in Emscripten so I could load the texture and font files.

Since Emscripten runs in the browser, it cannot access the local file system. Instead, Emscripten uses a Virtual File System, basically a file system hosted in memory. To make files available to this file system we need to tell Emscripten to package the files and preload them into the Virtual File System before the program starts running. We can do this with the following link option:

    --preload-file utils/webgltest/data@/data

This tells Emscripten to take the folder utils/webgltest/data and make it available in the Virtual File System under the path /data. If we put our font file in utils/webgltest/data/font.ttf we can then load it from the program with fopen("data/font.ttf", "rb").
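Because the preloaded files are exposed through the normal C file API, the loading code does not need anything Emscripten-specific. A minimal sketch of a helper that reads a whole file into memory could look like this (read_entire_file is a name made up for the example, not a function from The Machinery):

```c
#include <assert.h>
#include <stdio.h>
#include <stdlib.h>

// Reads a whole file into a malloc'ed buffer and stores its size in
// *size_out. Returns NULL on failure. The caller frees the buffer.
unsigned char *read_entire_file(const char *path, size_t *size_out)
{
    assert(path && size_out);
    FILE *f = fopen(path, "rb");
    if (!f)
        return NULL;
    unsigned char *data = NULL;
    if (fseek(f, 0, SEEK_END) == 0) {
        const long size = ftell(f);
        if (size >= 0 && fseek(f, 0, SEEK_SET) == 0) {
            // Allocate one extra byte so empty files don't hit malloc(0).
            data = malloc((size_t)size + 1);
            if (data && fread(data, 1, (size_t)size, f) == (size_t)size) {
                *size_out = (size_t)size;
            } else {
                free(data);
                data = NULL;
            }
        }
    }
    fclose(f);
    return data;
}
```

With the preload option above, read_entire_file("data/font.ttf", &size) would return the font data that was packaged with the application.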

Input

To support input, we need to connect the Emscripten input events to The Machinery’s input abstraction layer.

Receiving input events from Emscripten is pretty straightforward. You use functions such as emscripten_set_mousemove_callback() to set callback functions that get called whenever an event occurs. The callback functions convert these to tm_input_event_t events for The Machinery and store them in a buffer that The Machinery’s input system can query.

This looks something like this:

    void add_mouse_event(const tm_input_event_t *e)
    {
        mouse_events[mouse_events_n % MOUSE_EVENTS_BUFFER_SIZE] = *e;
        ++mouse_events_n;
        mouse_state[0][e->item_id] = e->data;
    }

    EM_BOOL mouse_event(int event_type, const EmscriptenMouseEvent *e, void *userdata)
    {
        tm_input_event_t ev = {
            .time = tm_os_api->time->now().opaque,
            .controller_id = mice[0],
            .source = &mouse_input_source,
            .type = TM_INPUT_EVENT_TYPE_DATA_CHANGE,
        };
        if (event_type == EMSCRIPTEN_EVENT_MOUSEMOVE) {
            ev.item_id = TM_INPUT_MOUSE_ITEM_MOVE;
            ev.data.f = (tm_vec4_t){ e->movementX, e->movementY, 0, 0 };
            add_mouse_event(&ev);
            ev.item_id = TM_INPUT_MOUSE_ITEM_POSITION;
            ev.data.f = (tm_vec4_t){ e->targetX, e->targetY, 0, 0 };
            add_mouse_event(&ev);
        } else if (event_type == EMSCRIPTEN_EVENT_MOUSEDOWN || event_type == EMSCRIPTEN_EVENT_MOUSEUP) {
            ev.item_id = e->button == 0 ? TM_INPUT_MOUSE_ITEM_BUTTON_LEFT
                : e->button == 1        ? TM_INPUT_MOUSE_ITEM_BUTTON_MIDDLE
                                        : TM_INPUT_MOUSE_ITEM_BUTTON_RIGHT;
            ev.data.f.x = event_type == EMSCRIPTEN_EVENT_MOUSEDOWN ? 1.0f : 0.0f;
            add_mouse_event(&ev);
        }
        return true;
    }

There is a little more to it because we need to set up the Emscripten keyboard and mouse as new input controllers in The Machinery, but this is the gist of it.
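The other half of this is the code that lets the input system query the buffer. Since the write counter only ever increases, a reader can keep its own cursor and ask for everything written since its last call. The sketch below shows the idea with a simplified event type; event_t, read_events(), and the tiny buffer size are stand-ins chosen for the example, not the actual tm_input_event_t plumbing.

```c
#include <assert.h>
#include <stdint.h>

enum { EVENTS_BUFFER_SIZE = 4 }; // tiny on purpose, to show wrap-around

// Simplified stand-in for tm_input_event_t.
typedef struct { uint32_t item_id; float value; } event_t;

static event_t events[EVENTS_BUFFER_SIZE];
static uint64_t events_n; // total number of events ever written

void add_event(event_t e)
{
    events[events_n % EVENTS_BUFFER_SIZE] = e;
    ++events_n;
}

// Copies up to `max` events written since *cursor into `out`, advances
// *cursor past them, and returns the number copied. If the reader has
// fallen more than a buffer behind, the overwritten events are skipped.
uint64_t read_events(uint64_t *cursor, event_t *out, uint64_t max)
{
    assert(cursor && (out || max == 0));
    uint64_t start = *cursor;
    if (start > events_n)
        start = events_n;
    if (events_n - start > EVENTS_BUFFER_SIZE)
        start = events_n - EVENTS_BUFFER_SIZE;
    uint64_t n = 0;
    while (start + n < events_n && n < max) {
        out[n] = events[(start + n) % EVENTS_BUFFER_SIZE];
        ++n;
    }
    *cursor = start + n;
    return n;
}
```

Each consumer owns its cursor, so several systems can read the same buffer independently without the writer having to know about them.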

I wanted to add support for touch events too so that Simple Draw would work on iPad. However, here I ran into trouble. Calling emscripten_set_touchstart_callback() gave me link errors when testing my program in Safari. Not exactly sure what was up with that and didn’t have time to investigate it during this short Hack Day.

Simple Draw

With both drawing and input working, I was ready to start porting Simple Draw. Rather than filling the existing Simple Draw code with a bunch of #ifdef I decided to just copy-paste it into my web code.

Getting Simple Draw to work was pretty straightforward at this point. I only ran into two minor snags, both related to memory.

First, I needed to add -s ALLOW_MEMORY_GROWTH=1 to the linker arguments to allow the memory use to be dynamically sized. Without this, Emscripten will use a fixed amount of memory for the web application.

Second, The Machinery uses some tricks from the Virtual Memory Tricks blog post, such as reserving very large areas of address space without committing it. This doesn’t really work under Emscripten since it doesn’t have a full virtual memory manager. All memory you request is automatically committed. So I needed some #ifdef to make sure that the memory allocations in Emscripten didn’t end up being obscenely large and causing the program to run out of memory:

    #if defined(TM_OS_WEB)
        const uint64_t max_objects = 10 * 1024ULL;
        tt->immutable_allocator = tm_allocator_api->create_fixed_vm(1 * 1024 * 1024, a->mem_scope);
    #else
        const uint64_t max_objects = 4 * 1024 * 1024 * 1024ULL;
        tt->immutable_allocator = tm_allocator_api->create_fixed_vm(100 * 1024 * 1024, a->mem_scope);
    #endif
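For readers who haven't seen the reserve/commit trick, here is a minimal sketch of how it is commonly done on a desktop POSIX system: address space is reserved with an inaccessible mmap() mapping and pages are made usable later with mprotect(). This illustrates the general technique only; it is not The Machinery's allocator, the vm_* names are made up for the example, and it is exactly the kind of thing that has no equivalent under Emscripten.

```c
#ifndef _DEFAULT_SOURCE
#define _DEFAULT_SOURCE 1 // exposes MAP_ANONYMOUS on glibc in strict modes
#endif
#include <assert.h>
#include <stddef.h>
#include <sys/mman.h>

// Reserves `size` bytes of address space without making it accessible.
// Returns NULL on failure.
void *vm_reserve(size_t size)
{
    void *p = mmap(NULL, size, PROT_NONE, MAP_PRIVATE | MAP_ANONYMOUS, -1, 0);
    return p == MAP_FAILED ? NULL : p;
}

// Makes the page-aligned range [p, p + size) readable and writable.
// Physical pages are only assigned when the memory is first touched.
// Returns 1 on success, 0 on failure.
int vm_commit(void *p, size_t size)
{
    assert(p);
    return mprotect(p, size, PROT_READ | PROT_WRITE) == 0;
}

// Returns the whole reservation to the operating system.
void vm_release(void *p, size_t size)
{
    assert(p);
    munmap(p, size);
}
```

On a desktop OS, reserving a gigabyte this way costs almost nothing until pages are committed and touched, which is why the non-web branch above can afford such generous limits.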

With this, Simple Draw was up and running in the browser:

SimpleDraw running in Safari on OS X.

You can try it yourself using the link below:

And here’s the full source code:

Conclusions and Future Ideas

All in all, I’m pretty excited that I was able to get a UI application written in The Machinery up and running on the web in a single day, but there are a lot of things I would like to spend more time looking at in the future:

OS X/iOS Support

It should be possible to use the same approach as outlined in this post to run on other platforms where we don’t currently have a rendering backend. For example, this would let us run The Machinery on OS X or iOS without needing a full Metal2 backend.

Dynamic Loading

I’d like to investigate how dynamic loading works under Emscripten so that we can load more of our plugins without resorting to sprinkling the code with #if defined(TM_OS_WEB) to avoid duplicate symbol errors.

Run the Full Editor

If we can load all the plugins, nothing should prevent us from running the full The Machinery editor instead of just the Simple Draw sample. We should be able to run everything except the 3D viewports (they would just be black). This would let us test most of the engine’s functionality on a large number of platforms.

Viewport Streaming

To bring the full editor experience to the browser we would also need some kind of solution for the 3D viewports. One approach would be to provide an actual OpenGL rendering backend supported by Emscripten. The problem with this is that even if we did this, the engine is unlikely to run well inside the web browser on a low-power handheld device unless we have a completely separate rendering pipeline using cheaper algorithms.

Another approach would be to render the viewports on a high-powered machine in the cloud and just stream the content to the client device. This would let us run our normal rendering algorithms and render everything in full fidelity. And since the UI would still be rendered locally, it would be crisp and snappy, without compression artifacts or rendering lag. That could be an interesting combination.

I hope I’ll be able to explore some of these approaches in the future.