Step-by-step: Programming incrementally

One thing that has really benefited my productivity (and also my general sanity) has been learning how to take a big task and break it down into smaller, more manageable steps. Big tasks can be frightening and overwhelming, but if I just keep working on the list of smaller tasks, then somehow, as if by magic, the big task gets completed.

Adding value

When programming, I take a very specific approach to this breakdown. I make sure that each step is something that compiles, runs, passes all the tests, and *adds value* to the codebase. Exactly what “adds value” means is purposefully left vague, but it can be things like adding a small feature, fixing a bug, or taking a step towards refactoring the code into better shape (i.e., reducing technical debt).

An example of not adding value is adding a new feature, but also introducing ten new bugs. It’s not clear that the value of the feature outweighs the cost of the bugs, so it might be a net loss.

Another example of not adding value is making the UI prettier, but also making the app run ten times slower. Again, it’s not clear that the prettier look is worth the performance hit, so it might be a net loss.

Of course, I can’t always be sure that I’m not introducing bugs, and “value” is inherently subjective (how much performance is a new feature worth?). The important part is the intention. My intention is to always add value with every single commit.

Basically, what I want to avoid is the “It has to get worse before it gets better”-attitude. Also known as: “The new system is the modern way to do it. Sure, it has some bugs and runs kind of slow right now, but once we’ve fixed that, it’s going to be way better than what we had before.” I’ve seen too many cases where these supposed fixes never happen and the new system, which was supposed to be better, just made things worse.

Plus, you know, it feels good to add value. If every day I can make a commit and that commit makes the engine better in some way, that makes me happy.

Pushing to master

In addition to implementing changes through a series of small commits, I also push every one of those small commits back to the master branch.

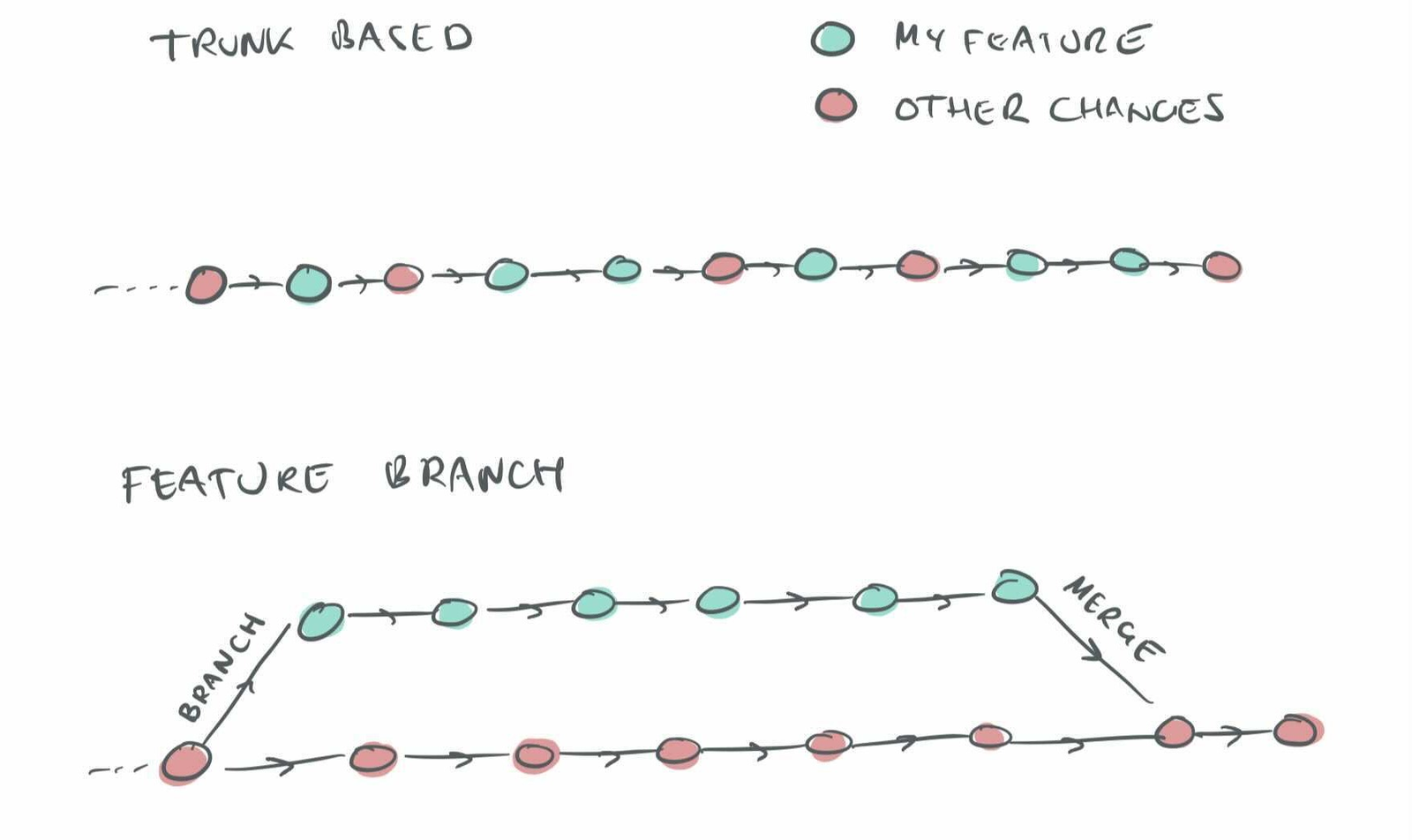

Note that this is the exact opposite of a feature branch workflow; instead, it is a form of trunk-based development:

- In a feature branch workflow, developers work on new features in isolated, separate branches of the code and don’t merge them back to master until they’re “complete”: fully working, debugged, documented, code reviewed, etc.

- In the trunk-based approach, features are implemented as a series of small individual commits to the master branch itself. Care must be taken so that everything works even when the features are only “partially implemented”.

Trunk-based vs feature branch development.

Proponents of the feature branch approach claim that it is a safer way to work, since changes to the feature branch don’t disrupt the master and cause bugs. Personally, I think this safety is illusory. Bugs in the feature branch just stay hidden until it’s merged back to master, at which point we suddenly get all of them at once.

Feature branches also go against my philosophy that every commit should add value. The whole idea behind a feature branch is: “we’re going to break a bunch of shit over here, but don’t worry, we’ll fix it before we merge back to master”. Better not to break stuff in the first place.

Here are some other advantages I see with the trunk-based approach:

- Fewer merge conflicts. With long-running feature branches, the code in the branch drifts further and further away from the code in master, causing more and more merge conflicts. Dealing with these is a lot of busy work for programmers and it also risks introducing bugs. Some of these bugs won’t be seen until the branch is merged.

- Less release-day chaos. Typically, all features scheduled for a certain release have the same deadline. This leads to all feature branches being merged just before the deadline, which means that we get all the merge and integration bugs at the same time, just before the release date. Getting a lot of bugs at the same time is a lot worse than having them spread out evenly. And just before a release is due is the absolute worst time to get them.

- No worry about the right time to merge. Since everybody knows that merging a feature branch tends to cause instability, there is a lot of worry about the “right time” to merge. You want to avoid merging right before a release (unless the feature is required for the release) to avoid introducing bugs in the release. So maybe just after the release has been made? But what if we need a hotfix for the release? While the merge is being held, valuable programmer time is being wasted.

- No rush to merge. When working with feature branches, I often felt a hurriedness about getting the branches merged. Sometimes because a branch was needed for a specific release. But also often because the developer was tired of dealing with merge conflicts and wanted to get it over with and move on to the next thing. Thus, the goal of only merging feature branches when they are “complete” was often compromised. (And of course, nothing is ever really “complete”.)

- Easier to revert. If major issues are discovered after the merge of a feature branch (which happens a lot), there is often a lot of reluctance to revert the merge. Another big feature branch might already have been merged on top of it (since lots of feature branches get merged at the same time, just before a release), and reverting it would cause total merge chaos. So instead of doing a calm, sensible rollback, the team has to scramble desperately to fix the issues before the release. With trunk-based development, any major issues would most likely have been discovered already. The final commit that makes the new feature “go live” is typically a simple one-line change that is painless to revert.

- Partial work is shared. In trunk-based development, the partial work done on a feature is seen by all developers (in the master branch). Thus, everybody has a good idea of where the engine is going. Bugs, design flaws, and other issues can be discovered early. And it is easier for others to adapt their code to work with the new feature. It’s also easier to estimate how much work is needed to complete a feature when everybody can see how far it has progressed.

- Easier to pause and pick up later. Sometimes, work on a feature might have to be paused for a variety of reasons. There might be more critical issues that need to be addressed. Or the main developer of the feature might get sick, or have vacation coming up. This is a problem for feature branches because they tend to “rot” over time, as the code base drifts further and further away, causing more and more merge conflicts with the branch. Code that is checked into master does not “rot” in the same way.

- Easier to address other bugs/refactors at the same time. When working on a feature or a problem, it is pretty common to find other, related problems exposed by the work you are doing. In the trunk-based approach, this is not an issue: you just make one or more separate commits to the trunk to fix those issues. With the feature branch approach, it is trickier. I guess the right thing to do would be to branch off a new, separate bug fix branch from master, fix the issue in that branch, merge that branch into the branch you are currently working on, and (once it passes code review) into master (so that other people get the bug fix before your feature branch is merged, because who knows when that will happen). But who has time for all that shit? So instead, people just fix the problem in their feature branch, and maybe cherry-pick it into master if they’re having a good day. So now, instead of being about a single isolated feature, the feature branch becomes a tangled mix of different features, bug fixes, and refactors.

The main challenge of the trunk-based approach is how to break a big task down into individual pieces, especially with the requirement that each piece should compile, run, add value, and be ready to be pushed into master. How can we push partial work without exposing users to half-baked, not yet fully working features?

Let’s look at some problems and how to solve them.

Problem #1: New features

An approach that works well for new features is to use a flag to control whether a feature is visible to end-users or not.

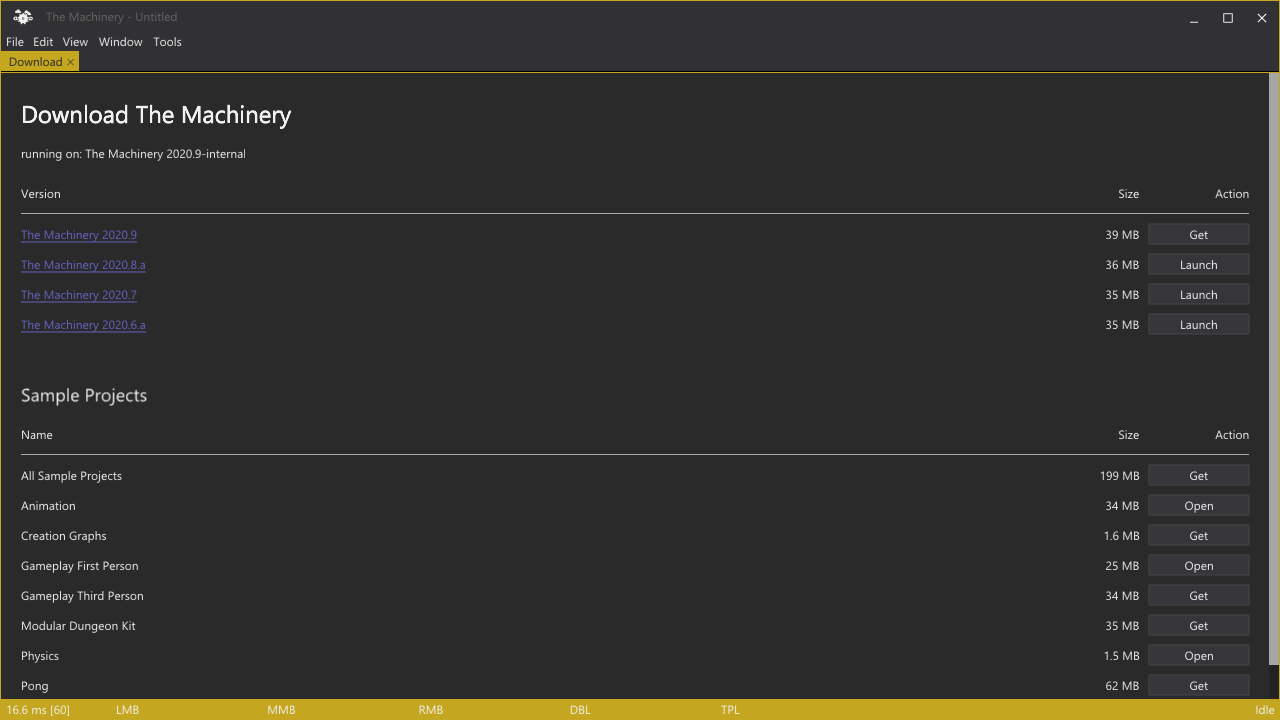

Let’s look at an example. A feature that I recently added to the engine was a Download tab that lets the users download new engine versions and sample projects from within the engine itself:

Download tab.

There are lots of different ways this could be broken down into smaller steps. Here’s an example:

- Add the Download tab to the menus and show a new blank tab when it’s opened.

- Show a (hard-coded) list of things to download (without working download buttons).

- Download the list of files from a server instead of having it hard-coded.

- Implement the [Get] button by synchronously downloading the file when it’s clicked.

- Switch to asynchronous, background downloading.

- Show a progress bar for the download.

- …

Normally, I don’t do a full breakdown like this upfront. Instead, I just kind of figure out the next logical step as I go along. I only sit down and do a serious planning session if the task is particularly tricky and incremental steps like this don’t come naturally.

To prevent end-users from seeing the tab before it’s actually working, I hide it behind a flag. This can be as simple as:

```c
const bool download_tab_enabled = false;

// ...

if (download_tab_enabled) {
    add_menu_item(tabs, "Download", open_download_tab);
}
```

To get the menu option to show the Download tab, you have to change the download_tab_enabled flag to true and recompile.

We call these flags feature flags since they selectively enable or disable individual features of the application. Once the feature is complete, we can remove the flag and just leave the true code path.

There are three main ways of implementing a feature flag:

- Through a #define macro that is checked by #ifdef.

- Through a const bool in the code (as in the example above).

- Through a dynamic bool variable that is initialized from a config file, a menu option or an internal debug console.

Out of these options, I think the third one is the best. You want to expose your new code to as many people as possible. That way, they can find bugs in your code, take your code into account when they refactor the codebase, etc.

If you use a #define flag, other people on your team won’t even compile your code. Thus, one of their changes could easily end up breaking your code. A const flag is better, because your code will still be compiled, but since people can’t try the new feature without recompiling your code, most people won’t bother.

With a dynamic flag, artists, producers, or end-users who don’t want to be bothered with rebuilding the application can just modify the config file and then give your new feature a test run.
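As a rough sketch of the third option, here is one way a dynamic flag could be stored and looked up at runtime. Everything in it is hypothetical (the feature_flag_t table, feature_flags_apply_line(), and feature_enabled()); a real engine would feed it lines from its own config file, menu, or debug console:

```c
#include <stdbool.h>
#include <string.h>

// Hypothetical runtime feature flags. Unlike a #define or a const bool,
// these can be flipped without recompiling.
typedef struct feature_flag_t {
    const char *name;
    bool enabled;
} feature_flag_t;

static feature_flag_t feature_flags[] = {
    { "download_tab", false }, // default: hidden from end-users
};

enum { NUM_FEATURE_FLAGS = sizeof(feature_flags) / sizeof(feature_flags[0]) };

// Applies one "name=true" or "name=false" line (without a trailing newline),
// e.g. read from a config file. Unknown names and malformed lines are ignored.
static void feature_flags_apply_line(const char *line)
{
    const char *eq = strchr(line, '=');
    if (!eq)
        return;
    size_t name_len = (size_t)(eq - line);
    for (int i = 0; i < NUM_FEATURE_FLAGS; ++i) {
        if (strlen(feature_flags[i].name) == name_len
            && strncmp(feature_flags[i].name, line, name_len) == 0) {
            if (strcmp(eq + 1, "true") == 0)
                feature_flags[i].enabled = true;
            else if (strcmp(eq + 1, "false") == 0)
                feature_flags[i].enabled = false;
        }
    }
}

// Returns the current value of a flag; unknown flags count as disabled.
static bool feature_enabled(const char *name)
{
    for (int i = 0; i < NUM_FEATURE_FLAGS; ++i)
        if (strcmp(feature_flags[i].name, name) == 0)
            return feature_flags[i].enabled;
    return false;
}
```

With something like this in place, the menu code would test feature_enabled("download_tab") instead of a compile-time constant, so the code is always compiled and anyone can turn the feature on.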

When the time comes to release a feature, you simply flip the default value of the feature flag from false to true and everybody will see the new feature. If there are issues and you need to revert, you simply flip the flag back. Later, when the feature seems stable, you can get rid of the flag and just keep the true path in the code.

You can even do a partial, staged rollout. E.g., you could set the flag to true for 1 % of the users and then slowly ramp that up while monitoring the crash logs and the forums for any issues. That way, if a problem is encountered, it will only affect a small number of users and you can quickly revert.
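One common way to implement such a percentage rollout (an assumption here, not necessarily how any particular engine does it) is to hash a stable user ID into one of 100 buckets and enable the feature for buckets below the current percentage. Because each user's bucket never changes, ramping the percentage up only ever adds users. The names rollout_bucket() and feature_enabled_for_user() are hypothetical:

```c
#include <stdbool.h>
#include <stdint.h>

// Maps a user ID to a stable bucket in [0, 100). The mixing steps are the
// splitmix64 finalizer, used so that sequential IDs spread out evenly.
static uint32_t rollout_bucket(uint64_t user_id)
{
    uint64_t x = user_id;
    x ^= x >> 30;
    x *= 0xbf58476d1ce4e5b9ULL;
    x ^= x >> 27;
    x *= 0x94d049bb133111ebULL;
    x ^= x >> 31;
    return (uint32_t)(x % 100);
}

// A user sees the feature if their bucket is below the rollout percentage.
// 0 disables it for everybody, 100 enables it for everybody.
static bool feature_enabled_for_user(uint64_t user_id, uint32_t rollout_percent)
{
    return rollout_bucket(user_id) < rollout_percent;
}
```

Reverting is then just setting the percentage back to 0.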

Problem #2: Rewrites

The above approach works well for new features, but what should you do if you are doing a major rewrite of an existing system?

In this case, it might be trickier to find an incremental step-by-step approach, because you might want to rip out large parts of the old system and it will take a while until the replacement code reaches feature parity.

It might be tempting to reach for the feature branch approach in this case, but again, I don’t think it’s the right strategy. A big problem is that you will have two conflicting goals. On the one hand, you want to merge early so that people see the improved code and you get some testing on the rewrites. On the other hand, you want to delay it as long as possible, so that you can reach feature parity and iron out all the bugs. What usually happens is an unsatisfying mix of the two.

A better approach is to make a parallel implementation and let the engine have two copies of the same system.

If you are doing a major overhaul, you could start by just copying the entire system code to a new folder. If you are rewriting from the ground up, you could start with an empty folder.

Depending on the nature of the system you are replacing, you could either have both systems (the old and the new one) running in parallel, or you could have a feature flag that selects which of the systems should be the default. For example, if you are rewriting the physics simulation, you probably need a flag to select if you are using the old or the new one since you want all physics objects to live in the same simulation (otherwise they won’t interact). On the other hand, if you are rewriting the particle effect system, you could potentially have both systems running and just select for each played effect whether it should play in the old or the new system.
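For the particle example, one way to let both systems coexist is to put them behind a shared interface and choose per played effect, with a feature flag supplying the default. This is a minimal sketch: particle_system_i, play_effect(), and the counting stub implementations are all hypothetical stand-ins for real systems:

```c
#include <stdbool.h>
#include <stdint.h>

// Hypothetical interface that both the old and the new particle system implement.
typedef struct particle_system_i {
    void (*play)(uint32_t effect_id);
    uint32_t (*num_playing)(void);
} particle_system_i;

// Stub implementations that only count the effects they were asked to play.
static uint32_t old_count, new_count;
static void old_play(uint32_t effect_id) { (void)effect_id; ++old_count; }
static uint32_t old_num_playing(void) { return old_count; }
static void new_play(uint32_t effect_id) { (void)effect_id; ++new_count; }
static uint32_t new_num_playing(void) { return new_count; }

static const particle_system_i old_particles = { old_play, old_num_playing };
static const particle_system_i new_particles = { new_play, new_num_playing };

// Feature flag selecting the default system. Individual effects can override it.
static bool particles_default_new = false;

typedef enum { PARTICLES_DEFAULT, PARTICLES_OLD, PARTICLES_NEW } particles_choice;

// Plays an effect in whichever system was chosen for it.
static void play_effect(uint32_t effect_id, particles_choice choice)
{
    bool use_new = choice == PARTICLES_NEW
        || (choice == PARTICLES_DEFAULT && particles_default_new);
    const particle_system_i *sys = use_new ? &new_particles : &old_particles;
    sys->play(effect_id);
}
```

A physics rewrite would instead need a single global switch, since all objects must live in the same simulation.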

Having parallel implementations lets you do a much smoother transition from the old to the new system. Everyone on the team can easily test out the new system. You can compare it to the old one for feature completeness, stability, performance, etc. If applicable, you could even run automated tests to verify that the new system produces the exact same output as the old one. Once the new system has been thoroughly verified, you can change the flag and start using it as the default.
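Such an automated comparison could be as simple as running both implementations on the same inputs and reporting the first disagreement. In this hypothetical sketch, the two "systems" are an old and a new way of counting set bits, and first_mismatch() is the comparison harness; a real test would feed in recorded engine data instead:

```c
#include <stdint.h>

// Both implementations share one signature so the harness can take either.
typedef uint32_t (*count_bits_fn)(uint64_t x);

// Hypothetical old implementation: tests every bit.
static uint32_t count_bits_old(uint64_t x)
{
    uint32_t n = 0;
    for (int i = 0; i < 64; ++i)
        n += (uint32_t)((x >> i) & 1u);
    return n;
}

// Hypothetical new implementation: clears the lowest set bit until none remain.
static uint32_t count_bits_new(uint64_t x)
{
    uint32_t n = 0;
    while (x) {
        x &= x - 1;
        ++n;
    }
    return n;
}

// Returns the index of the first input where the two implementations
// disagree, or -1 if they produce identical output for every input.
static int64_t first_mismatch(count_bits_fn old_impl, count_bits_fn new_impl,
                              const uint64_t *inputs, int64_t count)
{
    for (int64_t i = 0; i < count; ++i)
        if (old_impl(inputs[i]) != new_impl(inputs[i]))
            return i;
    return -1;
}
```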

The parallel implementations also provide a much gentler upgrade path for end-users. Instead of tying everyone to the same “merge date”, you can just expose the system selection flag to the end-users. Users who are eager to try out the improved features in the new system can turn the flag on early, while users who favor stability or depend on certain quirks in the old system can decide to stay on it, even after the new one has become the default.

And there is no immediate rush to retire the old system once the new one has become the default; you can eventually deprecate and phase it out once the burden of maintaining it outweighs the value of having it around.

Problem #3: Refactoring

For our final problem, let’s consider something even trickier — making a big refactoring change to the entire codebase. It could be things like:

- Enabling a new warning such as -Wshadow or -Wunused.

- Changing the parameters of a commonly used function.

- Changing a commonly used type, such as switching from std::string to an internal string type.

The challenge with refactors like this is that:

- They tend to touch a lot of the code, increasing the risk of merge conflicts.

- It’s often tricky to see how to do them incrementally. For example, if you change the parameters of a function, you must update all the call sites. Otherwise, the code simply won’t compile.

In some cases, it can be pretty straightforward how to approach the problem incrementally. For example, when we add a new warning such as -Wshadow, the fixes we make so that the code compiles with the warning do not cause any problems when compiling the code without the warning. So we can simply turn on the warning, fix a bunch of the errors we get (however many make a suitably sized commit), turn the warning off again, and then commit the result. Rinse and repeat until all the warnings are fixed, and then do a final commit that turns the -Wshadow flag on for everybody.
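To make this concrete, here is a hypothetical function in the state after one such fix. The original version (kept in the comment) declares a local variable that shadows a parameter, which -Wshadow reports; the renamed version compiles cleanly both with and without the warning, so it can be committed before the warning is switched on:

```c
#include <stddef.h>

// Before (warns under -Wshadow, since the local `count` shadows the parameter):
//
//     size_t count_matches(const int *values, size_t count, int needle)
//     {
//         size_t matches = 0;
//         for (size_t i = 0; i < count; ++i) {
//             size_t count = values[i] == needle; // shadows parameter
//             matches += count;
//         }
//         return matches;
//     }
//
// After: renaming the inner variable removes the warning without changing
// behavior, and the code is equally valid under -Wno-shadow.
static size_t count_matches(const int *values, size_t count, int needle)
{
    size_t matches = 0;
    for (size_t i = 0; i < count; ++i) {
        size_t is_match = values[i] == needle;
        matches += is_match;
    }
    return matches;
}
```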

In other cases, we can employ the parallel implementation strategy. Suppose we have a function that is used all over the code to allocate memory:

```c
void *mem_alloc(uint64_t bytes);
```

Now we want to add a system parameter so that all memory allocations can be tagged as belonging to a particular system (gameplay, graphics, sound, animation, etc.):

```c
void *mem_alloc(uint64_t bytes, enum system sys);
```

If we just added the parameter like this, the code wouldn’t compile until we fixed all the call sites. I.e., the incremental approach would not work.

What we can do instead is introduce a new parallel function:

```c
void *mem_alloc(uint64_t bytes);
void *mem_alloc_new(uint64_t bytes, enum system sys);
```

Now we can incrementally transition all of the code to use mem_alloc_new() instead of mem_alloc(). Once we have transitioned all of the code, we can remove mem_alloc() and then do a global search-and-replace that renames mem_alloc_new() → mem_alloc().
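During the transition, the two functions could share a single implementation. The sketch below assumes (this is not stated above) that the old mem_alloc() simply forwards to mem_alloc_new() with a placeholder tag, so call sites that haven't been converted yet stay visible in the per-system statistics. The SYSTEM_* constants, including SYSTEM_UNTAGGED, and the bytes_allocated counter are illustrative names:

```c
#include <stdint.h>
#include <stdlib.h>

// Illustrative system tags. SYSTEM_UNTAGGED marks allocations from call
// sites that still use the old function.
enum system {
    SYSTEM_UNTAGGED,
    SYSTEM_GAMEPLAY,
    SYSTEM_GRAPHICS,
    SYSTEM_SOUND,
    SYSTEM_ANIMATION,
    SYSTEM_COUNT
};

// Bytes requested per system: a minimal example of what the tag buys us.
static uint64_t bytes_allocated[SYSTEM_COUNT];

// New function: call sites are migrated to this one, a few per commit.
void *mem_alloc_new(uint64_t bytes, enum system sys)
{
    bytes_allocated[sys] += bytes;
    return malloc((size_t)bytes);
}

// Old function, kept only until every call site has been converted.
void *mem_alloc(uint64_t bytes)
{
    return mem_alloc_new(bytes, SYSTEM_UNTAGGED);
}
```

When bytes_allocated[SYSTEM_UNTAGGED] stays at zero in normal runs, that is a good hint that the old function can be removed and the rename done.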

The final challenge is the trickiest one of them all — changing one of the fundamental types in the codebase.

In fact, I recently had a run-in with this, which is what prompted me to write this whole blog post. In my case, I wanted to refactor our codebase to introduce a new type to represent the IDs of objects in The Truth. The Truth is our main data store in The Machinery. All the data that the editor works on is stored in The Truth, and every object in The Truth is referenced by its ID.

We used to represent Truth IDs with just a uint64_t, but since we have lots of other values that are also represented by uint64_ts, this was causing increasing confusion. In order to provide better documentation of where a Truth ID is expected, and some type safety against passing other uint64_t values, we decided to switch from using a plain uint64_t to wrapping it in a struct:

```c
typedef struct tm_tt_id_t {
    uint64_t u64;
} tm_tt_id_t;
```

Note that since Truth IDs are used everywhere, this change impacts thousands of lines in the code.

Somewhat arrogantly, I still set out to do this refactor as a single concentrated push. I know I should do things incrementally, that’s what this whole blog post is about, but sometimes I mess up.

As I worked on it, I realized this change was a lot bigger than I had originally thought and there was no way I was going to be able to finish it in a single sitting. I started to get that sinking feeling that things were spiraling out of control. I was changing thousands of lines of code. Was I really sure that I wasn’t introducing typos anywhere?

A lot of the changes were changing things like:

```c
if (!object_a) {
```

into:

```c
if (!object_a.u64) {
```

Was I really 100 % sure that I wasn’t sometimes typing:

```c
if (object_a.u64) {
```

instead? Running some tests would have increased my confidence, but since the code wouldn’t compile until I had fixed everything, I couldn’t run any tests.

Then I started to get merge conflicts with other people’s changes which I had to resolve by hand, hoping that I understood their code well enough not to break anything. It became harder and harder to picture the consequences of all the changes. How many bugs was I introducing?

In the past, I’ve often tried to deal with these “panicky” feelings by “powering through”: pulling long late-night coding sessions to try to wrestle this thing that is slipping away from me back under control, and trying to get all my changes in before someone else has a chance to push anything, so that I don’t have to deal with the merge conflicts. (Which of course just means that they have to deal with the merge conflicts instead. Ha ha!)

But these days I’m maybe a little bit wiser? I realize that these feelings are a sign that I have bitten off more than I could chew and that the right response is not to push forward, but instead take a step back, reflect and try to reformulate the problem so it can be approached in a series of smaller steps instead. Depending on how much trouble I’ve gotten myself into, I might be able to salvage some of the changes or I might just throw it all away and start over with an iterative approach. Even when I’ve had to throw everything away, I’ve never regretted it. In the end, working in a series of small, controlled steps is so much more productive that I feel like I always gained back the time I “lost”.

Back to the problem at hand. I knew I wanted to change the type incrementally, i.e., change it in some files but not in others, and still be able to compile and test the code. But how could I possibly do that, when everything depends on everything else? Once I change some function parameters to use tm_tt_id_t, any call that passes a uint64_t will produce an error.

My key insight was that this situation is very similar to the one of enabling -Wshadow. That transition was easy to do because we changed the code from compiling just under -Wno-shadow so that it compiles under both -Wshadow and -Wno-shadow. We can do this change incrementally until we’re finally ready to turn on -Wshadow.

Let’s do the same thing here. We’ll call the type of the ID objects ID_TYPE. Right now, our code compiles when ID_TYPE is uint64_t. Our goal is to rewrite that code so that it compiles both when ID_TYPE is uint64_t and when it is tm_tt_id_t. If we can incrementally transition to that state, then once all the code compiles when ID_TYPE is tm_tt_id_t, we can make that the default.

Here’s a simple example to show how this works:

```c
uint64_t get_data(uint64_t asset)
{
    const tm_the_truth_object_o *asset_r = tm_tt_read(tt, asset);
    uint64_t data = api->get_subobject(tt, asset_r, TM_TT_PROP__ASSET__OBJECT);
    return data;
}
```

To make this independent of the value of ID_TYPE, we can rewrite it as:

```c
ID_TYPE get_data(ID_TYPE asset)
{
    const tm_the_truth_object_o *asset_r = tm_tt_read(tt, asset);
    ID_TYPE data = api->get_subobject(tt, asset_r, TM_TT_PROP__ASSET__OBJECT);
    return data;
}
```

This works regardless of whether ID_TYPE is uint64_t or tm_tt_id_t. In our header file, we put something like this:

```c
typedef struct tm_tt_id_t {
    uint64_t u64;
} tm_tt_id_t;

#define TM_NEW_ID_TYPE 0

#if TM_NEW_ID_TYPE
typedef tm_tt_id_t ID_TYPE;
#else
typedef uint64_t ID_TYPE;
#endif
```

We can now commit and push our get_data() function without needing to change anything else in the code. Everything will still compile because ID_TYPE is by default defined as uint64_t, so all the places that call get_data() with a uint64_t will still work. We’ve taken a small, incremental step towards switching out the ID type.

To take another step, just as we did with -Wshadow, we set TM_NEW_ID_TYPE=1 locally. This will produce thousands of errors, but we don’t have to fix them all at once. We just fix a few (whatever is a suitable commit chunk) by modifying the code just as we did with get_data(). Then we change TM_NEW_ID_TYPE back to 0 and check that everything still compiles and runs OK. If it does, we can commit those changes. Rinse and repeat until the whole codebase is fixed.
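Flipping TM_NEW_ID_TYPE by editing the header means remembering to flip it back before every commit. A possible variation (an assumption, not the setup described above) wraps the default in #ifndef so the new type can be selected from the compiler command line with -DTM_NEW_ID_TYPE=1 instead. The asset_t struct and asset_object() function are hypothetical, written in the spirit of get_data():

```c
#include <stdint.h>

typedef struct tm_tt_id_t {
    uint64_t u64;
} tm_tt_id_t;

// Default to the old ID type, but let the build override it, e.g. by
// compiling with -DTM_NEW_ID_TYPE=1 for a local "find the next errors" pass.
#ifndef TM_NEW_ID_TYPE
#define TM_NEW_ID_TYPE 0
#endif

#if TM_NEW_ID_TYPE
typedef tm_tt_id_t ID_TYPE;
#else
typedef uint64_t ID_TYPE;
#endif

// Hypothetical converted code: it only stores and returns ID_TYPE values,
// so it compiles unchanged in both configurations.
typedef struct asset_t {
    ID_TYPE object;
} asset_t;

static ID_TYPE asset_object(const asset_t *asset)
{
    return asset->object;
}
```

Building the same files once normally and once with -DTM_NEW_ID_TYPE=1 then lists what still needs converting, while the committed header never changes.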

There might be some situations where just changing uint64_t to ID_TYPE doesn’t work. For example, there is some code in the codebase that looks like this:

```c
bool objects_equal(uint64_t a, uint64_t b)
{
    return a == b;
}
```

Since we are writing strict C code, we can’t implement operator== for tm_tt_id_t. We need to compare the objects with:

```c
return a.u64 == b.u64;
```

(Side note: I often wish that == would work on C structs. The compiler should determine whether the struct contains any padding bytes. If not, == should be implemented as memcmp; otherwise, as a member-by-member comparison.)
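Until compilers do that, the padding-free case can be handled by hand. A minimal sketch (tm_tt_id_equal() is a hypothetical name, and _Static_assert requires C11) that compares a tm_tt_id_t byte-for-byte and refuses to build if the struct stops being exactly one uint64_t:

```c
#include <stdbool.h>
#include <stdint.h>
#include <string.h>

typedef struct tm_tt_id_t {
    uint64_t u64;
} tm_tt_id_t;

// If a member or padding is ever added, the size changes and this fails to
// compile, instead of memcmp() silently comparing indeterminate bytes.
_Static_assert(sizeof(tm_tt_id_t) == sizeof(uint64_t),
               "tm_tt_id_t must be exactly one uint64_t with no padding");

// With no padding, comparing raw bytes is equivalent to comparing members.
static bool tm_tt_id_equal(tm_tt_id_t a, tm_tt_id_t b)
{
    return memcmp(&a, &b, sizeof(a)) == 0;
}
```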

Again, our goal is to replace the old code with new code that compiles for both possible values of ID_TYPE. This means we can’t just write a.u64, because that won’t compile when a is a uint64_t. But we can make use of the preprocessor once again and write something like this:

```c
#if TM_NEW_ID_TYPE
#define TO_U64(x) ((x).u64)
#else
#define TO_U64(x) (x)
#endif
```

Here, the TO_U64 macro will produce a uint64_t from an ID_TYPE object, regardless of whether the ID_TYPE is wrapped or not. Now, we can rewrite the objects_equal() function as:

```c
bool objects_equal(ID_TYPE a, ID_TYPE b)
{
    return TO_U64(a) == TO_U64(b);
}
```

And the code will compile with both the new ID type and the old one.

There are other ways of doing this too. For example, instead of TO_U64(x), we could have created an ID_EQUAL(a,b) macro.
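An ID_EQUAL() version of the transitional macros might look like the following sketch (one possible definition, assumed rather than taken from the codebase). It hides the .u64 access entirely, so converted call sites never touch the representation:

```c
#include <stdbool.h>
#include <stdint.h>

typedef struct tm_tt_id_t {
    uint64_t u64;
} tm_tt_id_t;

#define TM_NEW_ID_TYPE 0

#if TM_NEW_ID_TYPE
typedef tm_tt_id_t ID_TYPE;
// Wrapped IDs: compare the underlying values.
#define ID_EQUAL(a, b) ((a).u64 == (b).u64)
#else
typedef uint64_t ID_TYPE;
// Plain IDs: compare directly.
#define ID_EQUAL(a, b) ((a) == (b))
#endif

// objects_equal() written on top of ID_EQUAL() instead of TO_U64().
static bool objects_equal(ID_TYPE a, ID_TYPE b)
{
    return ID_EQUAL(a, b);
}
```

One possible advantage of this form is that, once the transition is done, ID_EQUAL() could become an ordinary inline function with no macro involved.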

The main point is that by making use of the preprocessor, it should be possible to take whatever code we have and rewrite it in such a way that it compiles with both possible values for TM_NEW_ID_TYPE. Once we can do that, we can incrementally transition more and more code to the new type.

Once the whole codebase is converted, we can disable the TM_NEW_ID_TYPE=0 code path and then (incrementally, if we so desire) remove all the transitional macros (ID_TYPE, TO_U64, etc.) by replacing them with their TM_NEW_ID_TYPE=1 values.

Using this approach, I was able to completely transition over from uint64_t to tm_tt_id_t with 10-15 incremental commits over a couple of days, while all the time compiling and testing to make sure nothing was broken by the changes. Commits from other team members didn’t cause many merge issues, because my commits were all short-lived (an hour or so of work) and didn’t touch that many files. This big and scary change was suddenly very manageable.

Conclusions

I think the incremental pattern can be applied everywhere. It just requires a little bit of ingenuity to reformulate the problem in an incremental way and this is something that you get better at with practice.

Doing things incrementally has a bit more overhead. For example, we weren’t able to change object to object.u64 in one go; we had to transition via TO_U64(object). However, I think that extra time is soon earned back. By working incrementally you can make calm, steady, confident progress towards your goal, and in the end that allows you to work a lot faster. For example, with the ID type change, I was able to make a lot of changes using global search-and-replace with regular expressions. If I hadn’t been able to regularly compile and test my code, it would have been a lot harder to trust that the search-and-replace was doing the right thing.

Also, the C preprocessor is kind of a weird thing to have in the language, but sometimes it’s really neat that it’s there when you need to do stuff like this.