Summer Fun with Creation Graphs

This week’s blog is a guest post from Karll Henning, who has been experimenting with Creation Graphs. Thank you so much Karll!

Feel free to read the post in detail or skim over it. It’s long, but the first half is mostly context and an argument for why this matters, while the second half is an easier-to-read walkthrough of the things I have done and would like to show. The post itself isn’t technical, but it assumes some familiarity with the game development landscape of the last decade, especially technical art, and some of the language can feel abstract if you are new to the area. I tried to keep it accessible without hurting the point being made or the content.

Creating games is getting more complicated. Some games aim for higher fidelity worlds, others aim for bigger worlds, and in some cases both. Bigger worlds often mean storing more data, streaming that data, and rendering or simulating it as a world for the player.

Higher fidelity is rather open to interpretation. It could mean better graphics, dynamic weather systems, physics, audio, or whatever takes us closer to the game design. Regardless, it all sums up to more data.

More data by itself wouldn’t be a major problem, but it is usually accompanied by higher complexity, and more complex data means more complicated workflows and data processing. Years ago we were able to make a tiling brick texture with grass in between, a low poly mesh, call them roadMesh.obj (mesh) and brickWithGrass.png (texture), and we would get away with it!

But now we want more fidelity! We want to make the brick pop out, we want the tiling pattern to not be visible, we want the grass to pop out and be dynamic! Intuitively we could:

- Brute force it: Model the road on a brick-detail level and create enough variations. It’s simple and straightforward, but it will eat many artist-hours and precious storage, and it’s not very flexible.

- Alternative to save resources: Model the road with less detail, use a heightmap to apply on-the-fly hardware tessellation to the mesh, and place grass meshes on top. Not as simple, but a lot more flexible, and it saves resources.

The alternative is how modern game development usually approaches this issue, and rightfully so: resources in a game are very limited. But this approach inevitably adds complexity. Every time we save resources and move closer to that fidelity-performance sweet spot, we add complexity that the workflow inherits for the rest of development.

It doesn’t help that every game has a unique combination of requirements, which motivates a different set of compromises. It’s not just that games are becoming highly complex; each game has its own high complexity. Check this Frame Breakdown Compilation by Adrian Courrèges. The breakdowns are limited to the rendering side of things, but they give a good idea of how each game has its own approach and set of compromises.

There is so much more now. We have dozens of ways to do lighting, global illumination, shadowing, anti-aliasing, reflections, and much more. Depending on the game, for each mesh asset we may want a visual mesh, a shadow mesh (not even a mesh, maybe a capsule representation!), and a collision mesh. To make everything worse, we may want to stream all this data with multiple levels of detail, where some data should be streamed and other data computed and amortized. We can keep adding complexities all day long.

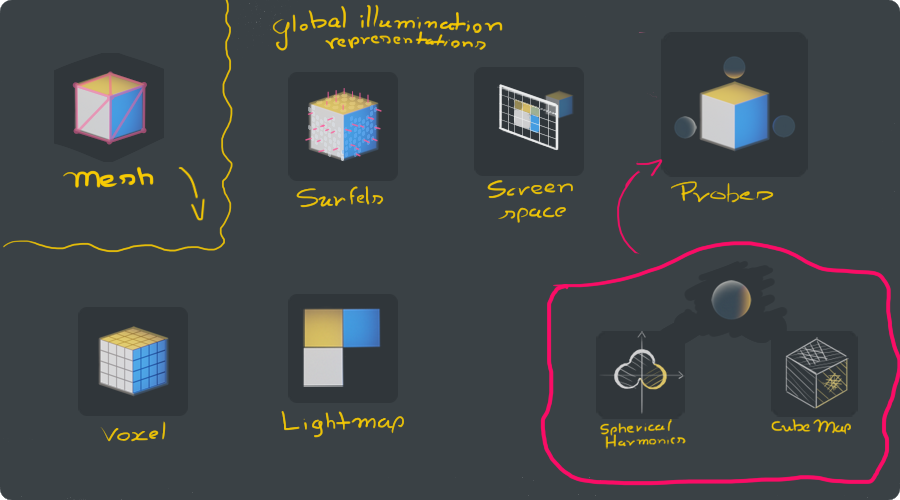

The lighting information around a scene can be represented and stored in several ways. A game may even use a combination of solutions listed above, depending on the game's needs.

Now, what does any of this have to do with Creation Graphs? In my humble personal opinion: Everything! Games weren’t always this complex. We could get by with the artist’s and the programmer’s work being almost independent of each other, but the path to higher fidelity tends to shorten this gap. One example is programmable shaders causing the rise of technical art: many artists learn programming specifically because shaders allow them to create more dynamic content.

The issue is that games have evolved a lot, but workflows and tools haven’t received the same love and weren’t able to keep up with the complexity or the technical advancements.

We don’t have tools and workflows fit to handle these case-specific high complexities, and it’s unlikely we will solve this problem by endlessly adding black box solutions to a game engine.

Creation Graphs

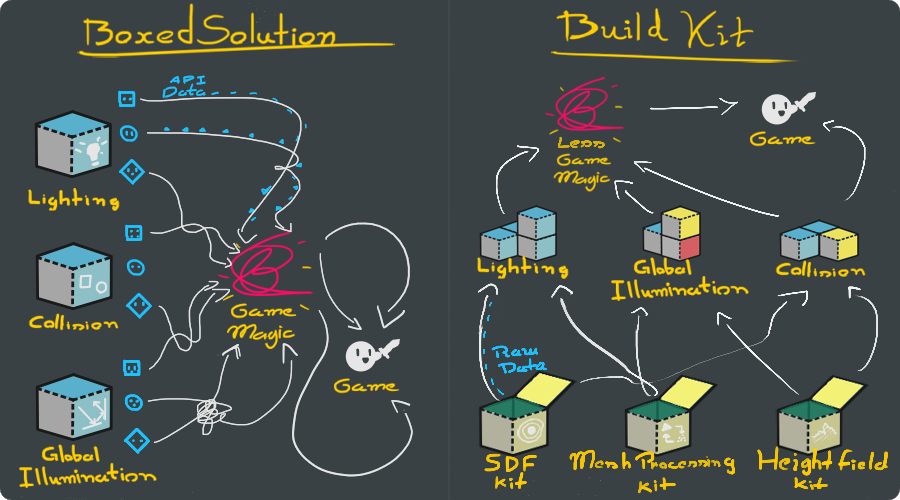

That’s why I was eager to try Creation Graphs. Instead of providing a black box solution for importing assets, processing, and rendering them, a toolbox is provided for you to build a solution! I would like to joke that this is the IKEA of asset management, but it’s closer to a customizable LEGO collection.

Left: Usually, game engines provide boxed solutions for the most common use cases. This works well until your solution becomes more specific or you need data internal to the API that may or may not be exposed.

Right: The Machinery plugin-based approach and Creation Graphs allow for customizing workflows and building your own solutions like a building kit.

With all this in mind and an enthusiasm for research, this last summer (southern hemisphere) I went on to explore Creation Graphs. I wanted to see what they can do and how they feel to use, and to try handling cases I have experienced in the past.

The inspiration was born directly from this The Machinery 2020.6 update announcement. In the update, Creation Graphs got the Render Pass Node with the intention to move it towards a more generic data-processing framework.

With that in mind, I have done a series of experiments separated into three sections: Procedural Textures, Grass Placement, and Signed Distance Fields. I haven’t chosen these for any specific technical reason. They just felt diverse enough, and they are things I wanted to play around with.

I won’t go into details about how to implement similar effects, as this would easily explode the length of this post. But I will try to cover each one of them enough to illustrate the possibilities and how Creation Graphs help with that.

Procedural Textures

I wanted to start with small tasks and build up from there, but not too small that it isn’t relevant. One common situation when I’m prototyping a new project is that I would like everything to be as lightweight as possible and not look terrible.

I don’t want to open Substance Designer just to create some placeholder textures and import them. That’s already way too much work for something that small!

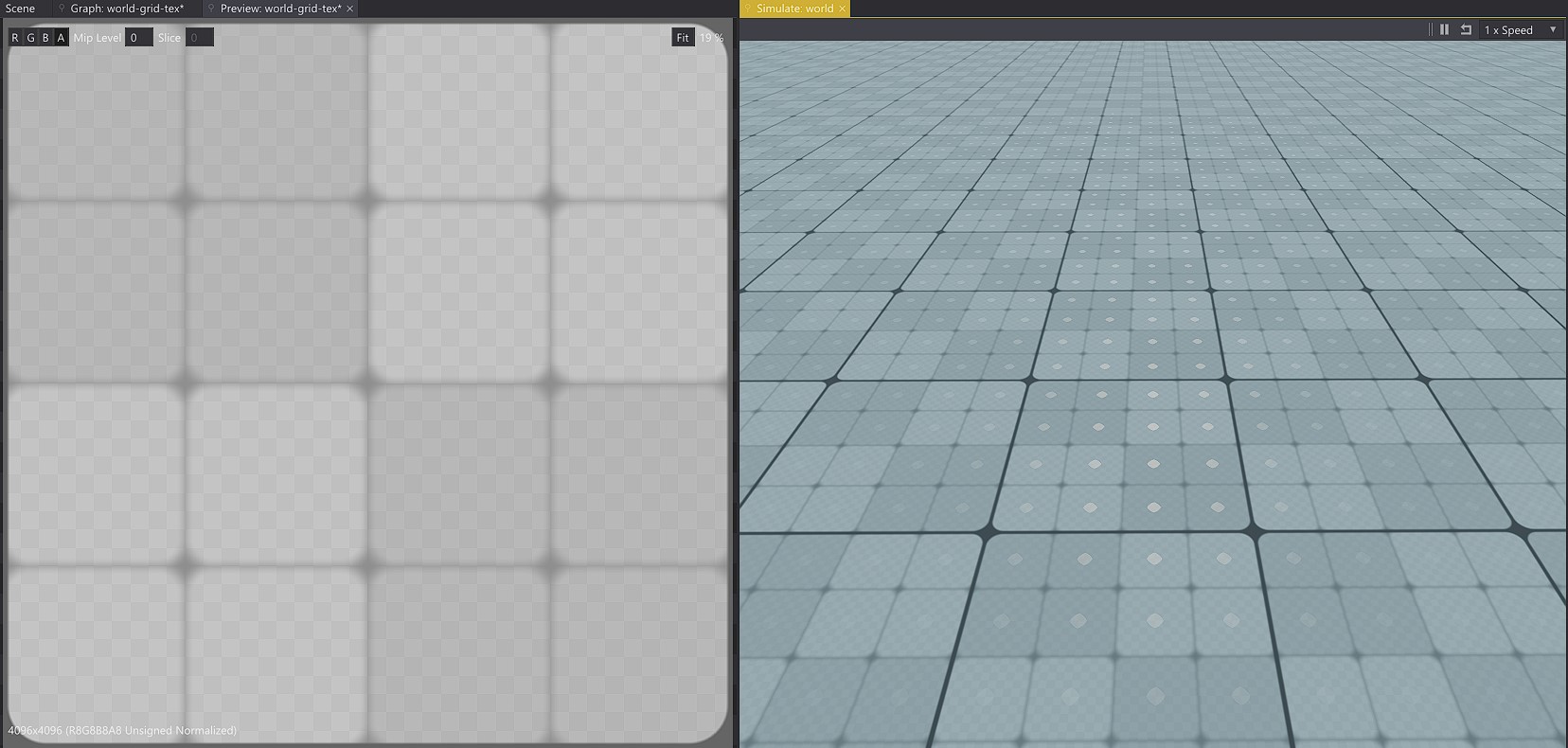

My first idea was: can I use Creation Graphs to create a procedural texture? In other engines, it’s not uncommon to have a node editor that allows you to create your own shader, but you are rarely able to precompute a texture and load it into a material.

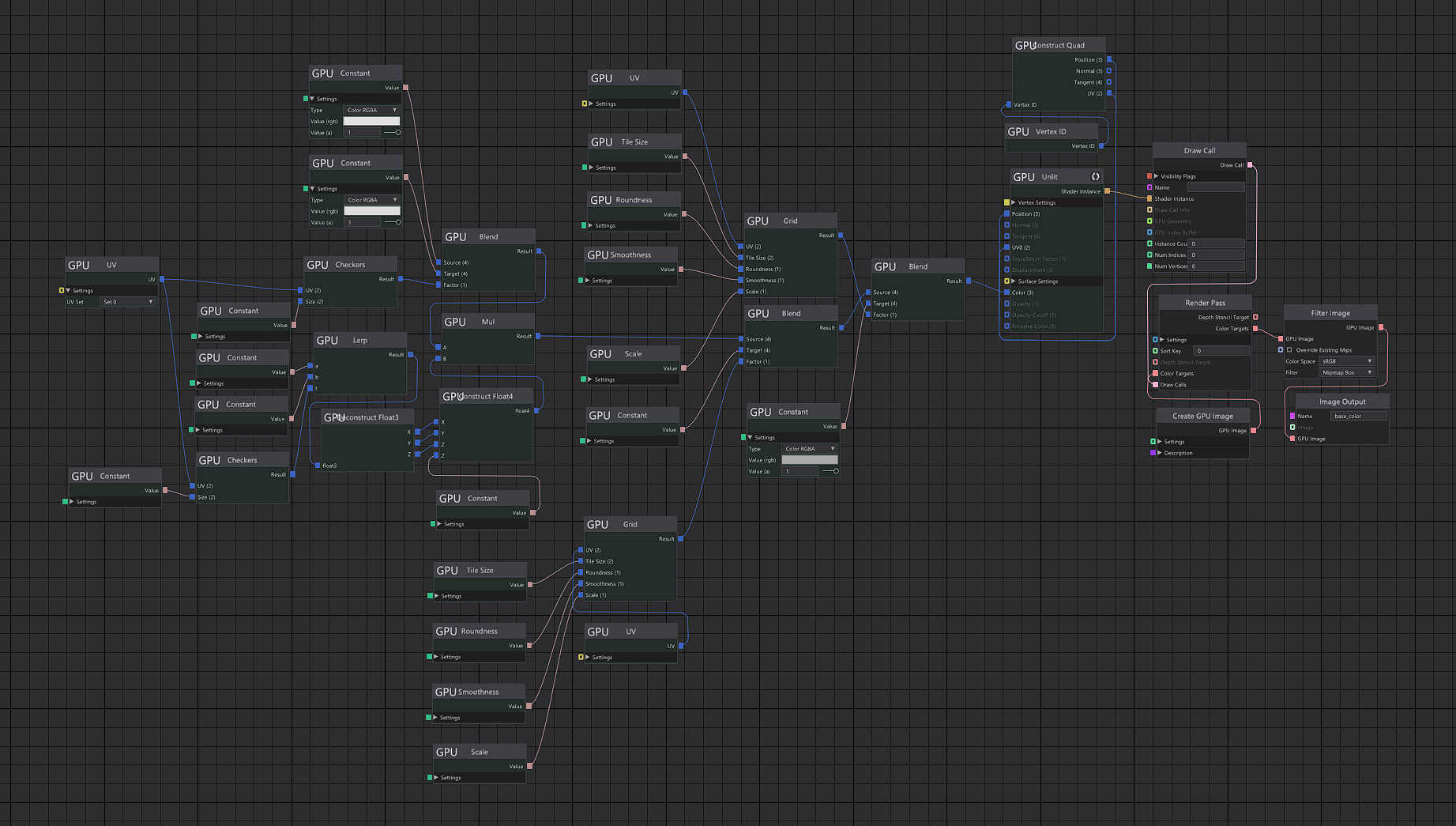

I had to create a few nodes myself, but it worked well enough! I have a Creation Graph that creates the following texture and I can easily import it into other materials. Doing this I have a lightweight texture that I can easily import into my projects without bloating the file size, no need to rely on external programs, and can change parameters as I feel like!

Grid-like texture procedurally generated and its respective creation graph.
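To give a rough idea of what precomputing such a texture involves, here is a minimal Python sketch of a grid-like texture. It is not the Creation Graph itself or any of its nodes: `grid_texture` and all of its parameters are hypothetical names picked for illustration, and the texture is just a list of grayscale rows.

```python
def grid_texture(size=64, cell=16, line_width=2, line=255, background=40):
    """Return a size x size grayscale image (list of rows) with grid lines.

    A texel belongs to a grid line when it falls within `line_width`
    texels of the start of a cell, horizontally or vertically.
    """
    return [
        [
            line if (x % cell < line_width or y % cell < line_width) else background
            for x in range(size)
        ]
        for y in range(size)
    ]


# Changing a parameter and regenerating replaces re-exporting from an external tool.
tex = grid_texture()
```

The point is the same as in the graph version: the texture is described by a handful of parameters instead of stored pixels, so it stays lightweight and easy to tweak.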

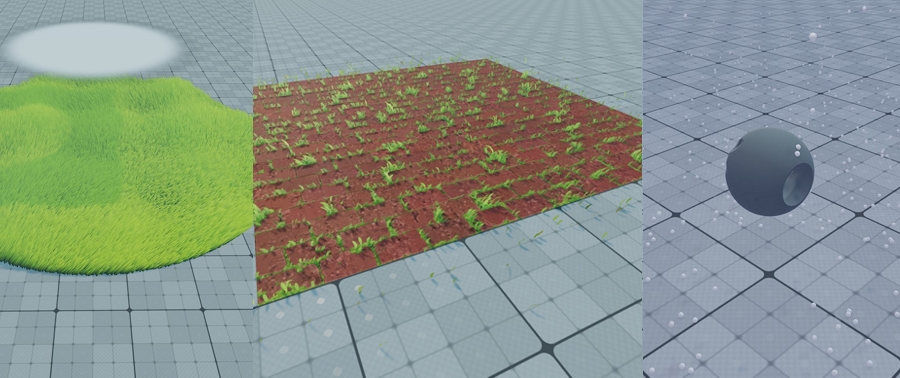

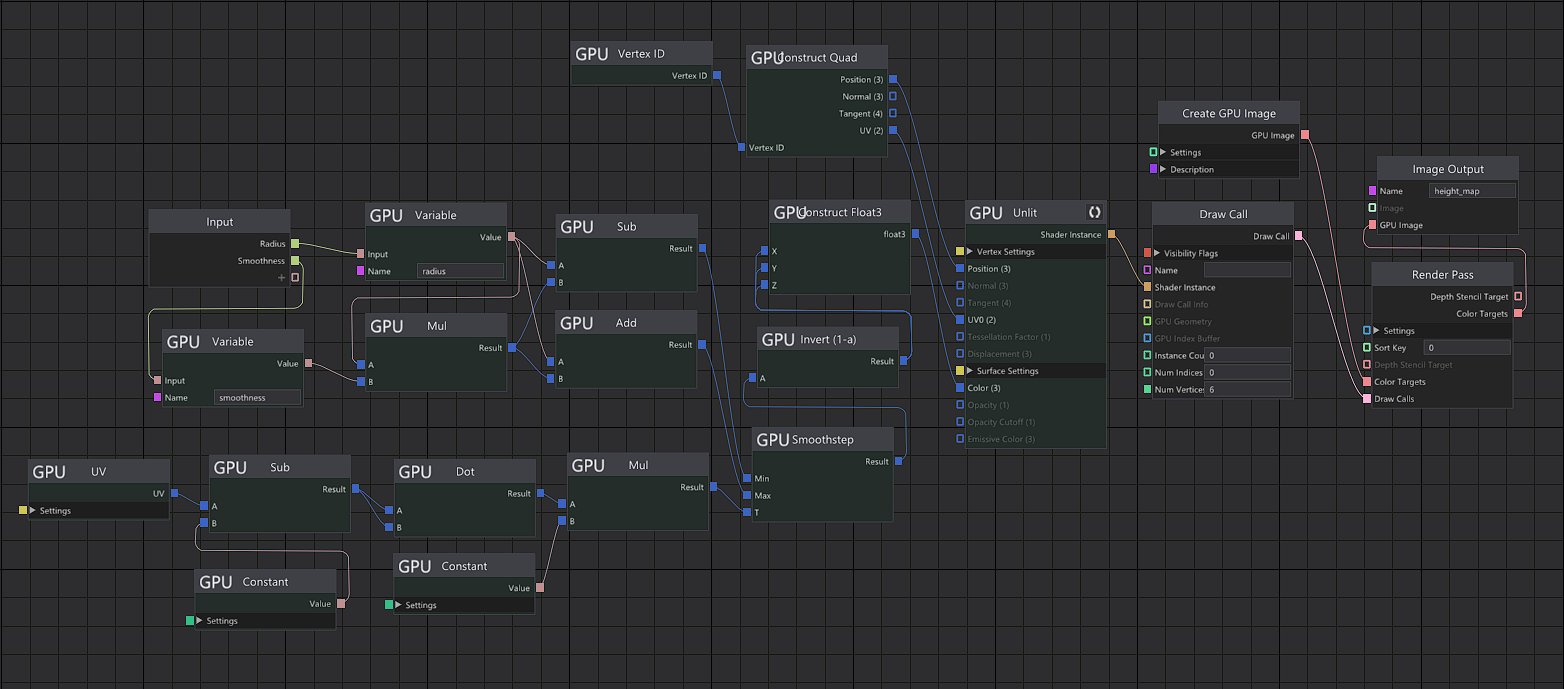

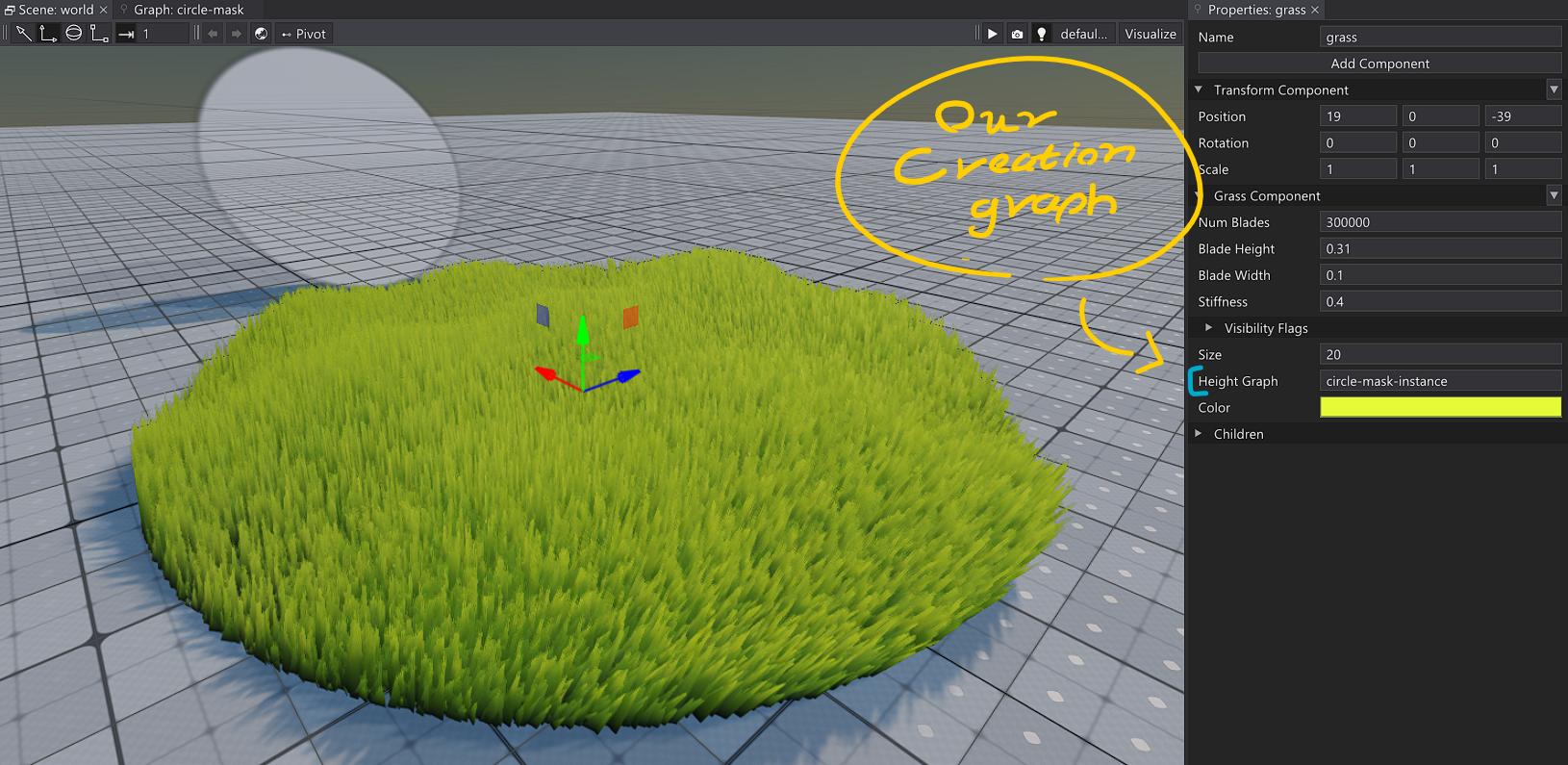

One other thing I wanted to try was to import a Creation Graph into a component. Luckily, I had created a Grass Component in a previous project. Starting from there, I could add a Creation Graph with a texture output as a parameter to mask the grass. The result was something like this:

Grass component being masked by a procedural circle mask.

Above: `circle-mask` creation graph drawing a circle mask texture.

Below: Grass component with circle-mask creation graph as a parameter.

Grass Placement

Earlier in this post, I used an example of a tileable brick texture with grass in between the bricks. This is what we are playing with in this part of the post! We have the following tileable brick texture:

Left: Our monotonous tileable brick texture.

Right: The same texture as a surfel cloud.

As artists, we would love to break the pattern and the monotony, and bring life to this flat bunch of dried clay. We could achieve this by adding dynamic grass meshes on top of the green regions.

We can randomly sample the texture using the GPUSim nodes. We emit a fixed quantity of particles in random positions, using the particles' data channels to store the color and roughness at their respective positions.

This is enough to acquire a surfel cloud (image above) or point cloud, which are useful data representations for global illumination algorithms and mesh processing. Personally, I just like the brush-like look we can get from this, and the flexibility to get some cool effects:

Surfel cloud being offset in a wavy manner.

Now we can move the samples in a random direction whenever they fall outside a green surface, so that eventually they end up in a green area. I find this approach useful because it amortizes the sampling calculation, which helps with streaming data, doing calculations on the fly, and getting interactive editing tools for data-heavy operations.
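The idea of nudging samples until they land on green can be sketched outside the engine in a few lines of Python. This is only an illustration under assumptions, not the GPUSim graph: the `on_green` mask is a made-up stand-in for sampling the brick texture (thin "mortar" lines count as green), and `relax_step`, the step size, and the sample count are all hypothetical.

```python
import math
import random


def on_green(u, v, cell=0.25, gap=0.05):
    """Hypothetical mask: thin lines between 'bricks' count as green."""
    return (u % cell) < gap or (v % cell) < gap


def relax_step(samples, rng, step=0.02):
    """Move every sample that is NOT on green a small step in a random direction.

    Samples already on green stay where they are, so the number of
    samples on green can only grow from one step to the next.
    """
    moved = []
    for u, v in samples:
        if not on_green(u, v):
            angle = rng.uniform(0.0, 2.0 * math.pi)
            u = (u + step * math.cos(angle)) % 1.0  # wrap around the tile
            v = (v + step * math.sin(angle)) % 1.0
        moved.append((u, v))
    return moved


rng = random.Random(7)
samples = [(rng.random(), rng.random()) for _ in range(500)]

# One relax_step per "frame"; track how many samples sit on green.
history = [sum(on_green(u, v) for u, v in samples)]
for _ in range(200):
    samples = relax_step(samples, rng)
    history.append(sum(on_green(u, v) for u, v in samples))
```

Each call to `relax_step` is cheap, which is what makes it possible to run one step per frame instead of solving the whole placement at once.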

Most importantly, this is the foundation for distributing grass meshes over our surface! After exposing many of my grass component functionalities as nodes, we are able to reuse the same GPUSim nodes to sample and simulate the grass, resulting in this:

The above video shows the grass being progressively sampled over multiple frames. This allows amortizing the computation, which means distributing the computational load over multiple frames instead of doing it all in a single frame.
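As a small, engine-agnostic illustration of amortization, the Python sketch below processes a fixed budget of items per frame and exposes the partial result after each one. The names `amortized` and `budget_per_frame` are assumptions made for this example and do not correspond to anything in The Machinery.

```python
def amortized(items, work, budget_per_frame):
    """Process at most `budget_per_frame` items per frame.

    Yields the list of results accumulated so far once per frame, so the
    caller can already use (for example, render) whatever is ready.
    """
    results = []
    for start in range(0, len(items), budget_per_frame):
        for item in items[start:start + budget_per_frame]:
            results.append(work(item))
        yield results


points = list(range(10))
# Number of processed items visible at the end of each frame.
frames = [len(done) for done in amortized(points, lambda p: p * p, budget_per_frame=4)]
```

With ten items and a budget of four, the work is spread over three frames instead of stalling a single one.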

While distributing grass over a surface may not be an impressive use case by itself, the foundation used to build it is powerful and flexible. Typically this behavior would be hardcoded as a scattering tool or grass painting tool. If our lighting or collision solutions then need the position of each grass blade baked into a texture for ambient occlusion or a force map, we are probably out of luck.

Creation Graphs make this a lot easier, as now we can output the positions and reuse them for our baking needs! This is a lot more flexible than a traditional approach allows, and it even lets us bring operations that would usually be done in an external asset authoring tool closer to the game.

Signed Distance Fields

Talking about collision solutions, we often need to transform data from one representation to another, e.g. we usually render triangle meshes because they are cheap to render. On the other hand, they are terrible for collision calculations, so for collision, we tend to represent the same mesh as a group of simple primitives (cubes, spheres, capsules).

This is enough for general gameplay purposes, but it can’t cover every single case. For example, it’s very inefficient to represent a terrain using simple primitives.

Another case is that we need a much finer collision for particles and simulations, and a much faster way to compute it. An efficient representation for collisions is Signed Distance Fields (SDF). We could bake the meshes as SDFs using 3D textures (aka Volume Textures) and use them for particle collisions. What I just described is approximately the solution Bluepoint Games used in the Demon’s Souls remake!
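To make the appeal of SDFs for particle collision more concrete, here is a minimal Python sketch. It uses an analytic sphere instead of a baked 3D texture, and `sphere_sdf`, `sdf_gradient`, and `collide` are hypothetical helpers written for this example, not the node or component described in this post. The response is the simplest one I could think of: push the particle back to the surface and cancel the inward part of its velocity.

```python
import math


def sphere_sdf(p, center=(0.0, 0.0, 0.0), radius=1.0):
    """Signed distance to a sphere: negative inside, positive outside."""
    return math.dist(p, center) - radius


def sdf_gradient(sdf, p, eps=1e-4):
    """Approximate the normalized SDF gradient with central differences."""
    grad = []
    for axis in range(3):
        hi, lo = list(p), list(p)
        hi[axis] += eps
        lo[axis] -= eps
        grad.append((sdf(hi) - sdf(lo)) / (2.0 * eps))
    length = math.sqrt(sum(g * g for g in grad)) or 1.0
    return [g / length for g in grad]


def collide(sdf, pos, vel):
    """If the particle is inside the shape, push it out and stop inward motion."""
    d = sdf(pos)
    if d >= 0.0:
        return pos, vel  # outside the shape: nothing to do
    n = sdf_gradient(sdf, pos)
    # d is negative here, so subtracting d * n moves the particle outward.
    pos = [p - d * ni for p, ni in zip(pos, n)]
    vn = sum(v * ni for v, ni in zip(vel, n))
    if vn < 0.0:
        vel = [v - vn * ni for v, ni in zip(vel, n)]
    return pos, vel


# A particle half a unit inside the sphere, moving further inward.
pos, vel = collide(sphere_sdf, [0.0, 0.5, 0.0], [0.0, -1.0, 0.0])
```

The collision test is a single distance lookup per particle regardless of how complicated the shape is, which is why a baked volume texture scales so well to thousands of particles.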

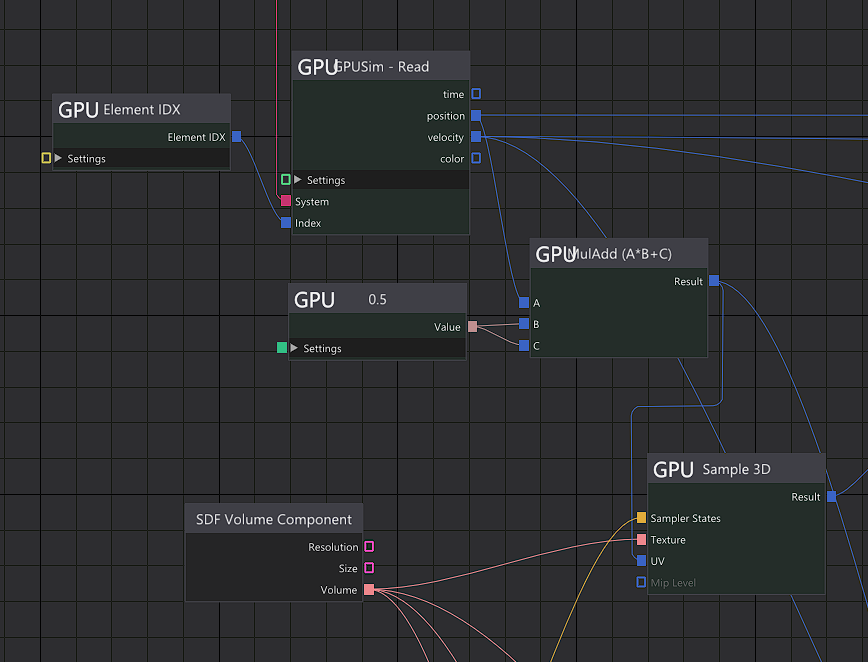

This section covers computing an SDF in an SDF Volume component, exposing the volume as a Creation Graph node, and using it to handle collision of a simple particle system. I started to run out of time (self-imposed deadline) and couldn’t implement a Mesh to SDF node, nor organize the node and component setup in a more presentable way. As an example, there is only one Creation Graph handling both particle simulation and SDF calculation, and it looks like this.

I don’t think it’s worth the effort trying to show in more detail each part, as it’s complex and full of “shortcuts”. Putting that aside, it’s still worth taking a look at how we are exposing the SDF (baked by the SDF Volume Component) to the particle simulation:

Close up on SDF Volume Component node.

Overall this custom node was simple to create, and it’s rewarding to be able to make it work with the simulation without having to deal with API shenanigans or wondering if the particle system supports SDF collision. The foundational blocks are all there, and they let me load the data and make the pieces interact as I wish: no waiting for features, no API gymnastics, just data!

As I mentioned earlier, due to time constraints we aren’t implementing a Mesh to SDF node (totally possible though!). Instead, we will model it by hand, something complex enough to show what SDFs are capable of.

Simulation with thousands of particles interacting with a complex and convex SDF shape. In a game, we would probably have it as an alternative representation that isn't visible to the player.

The video above shows a particle simulation interacting with a complex shape. This kind of interaction is easy and efficient to do with SDFs, highlighting the importance of making data processing natural to a game engine. As mentioned earlier in this section, the most common boxed solution is composing primitives to represent more complex shapes. That approach would be laborious and inefficient in this case.

Conclusion

In the first section, I made a point about the growing complexity in game development and how tools and workflows haven’t been able to keep up with the advances made in graphics development. The ones who feel this discomfort the most are technical artists. They are often the bridge connecting art and programming, two areas that need to communicate more and more as complexity grows.

Personally, I believe Creation Graphs (allied with the plugin-based approach in The Machinery) are a great response to this ever-growing complexity. They don’t try to hide the complexity by making things simple, they expose it with a set of tools and make the complexity manageable!

It comes at a cost though: it’s a brand new approach. Doing something new while changing paradigms is hard and risky, and it opens up many design, UX, and technical questions. I had three major difficulties while working with Creation Graphs:

- Discoverability: Many things weren’t intuitive. You can do so much in a single creation graph that it becomes hard to look at one node and understand its context or how it can be used with another node.

- Organization: What’s the easiest way to organize my nodes and Creation Graph files?

- Visualization: I wish it were easier to visualize the data flow. What does my data look like at this node if I input this?

Those would be much easier to answer if this path were already explored and we could learn from others’ mistakes. While a lot can be taken from other node editors (popularized in the last decade), the Creation Graph offers a lot more flexibility than usual.

I believe these are already a concern of The Machinery team, but it’s worth bringing up in this post because… well, I have experienced these issues! Regardless, I hope this paradigm will become more commonplace in game development. In my opinion, there is a lot of unexplored potential.

I look forward to a future where plugin developers provide solutions and creation graph nodes, so you can use something premade or customize it for your own needs.

Premade easy-to-use solutions are far from being one size fits all, and they hide the complexities of the underlying data. We have to embrace that data comes in all forms and shapes, from different places, and at any time. A decent foundation for visualizing and controlling data flow should be more of a priority in game development.

For the future, I would like to take these experiments further and try Creation Graphs for developing tools in game development. I would have done this in this post, but time is limited and I shouldn’t push myself too hard. It’s important to understand and accept our own limits as well.

Be safe, and a late Merry Christmas and Happy New Year to y’all!