The (Machinery) Network Frontier, Part 2

In this part of the series, we’ll take a look at some high-level constructs that build on the basic concepts we saw in part 1 and, more importantly, at how those concepts are exposed to the end user in the editor.

Without further ado, let’s start.

Network Node Asset

A Network Node Asset defines what a Network Node should do with regard to the network: should this node accept connection requests from other nodes? What systems/engines should this node’s simulation run? Should changes that happen to entities be sent to other nodes? And so on. Stated more concisely: every Network Node in The Machinery is an instance of a specific Network Node Asset.

A Network Node Asset is an asset just like any other kind of asset: it can be imported, exported, modified, and reused as you wish directly in the editor.

The idea behind this concept is that we don’t want to support just the classic Client-Server model in The Machinery: we want to be a bit more flexible and allow any networking model the end-user can think of. (Still, we will ship with predefined server and client Network Node Assets, ready to be used and customized for your specific needs.)
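To picture what such an asset carries, here is a minimal sketch in C. It is not The Machinery's data layout: every type, field, and system name below is hypothetical and only mirrors the three questions listed above.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

// Hypothetical description of a Network Node Asset. None of these names come
// from The Machinery's headers; they only mirror the questions in the text.
typedef struct node_asset_sketch_t {
    const char *name;           // e.g. "server" or "client"
    bool accepts_connections;   // accept connection requests from other nodes?
    bool sends_entity_changes;  // send entity changes to other nodes?
    const char *const *systems; // systems/engines this node's simulation runs
    size_t num_systems;
} node_asset_sketch_t;

// Made-up system names, purely for illustration.
static const char *const server_systems[] = { "physics", "gameplay" };
static const char *const client_systems[] = { "input", "rendering" };

// Two presets in the spirit of the predefined server and client assets.
static const node_asset_sketch_t server_asset = {
    .name = "server",
    .accepts_connections = true,
    .sends_entity_changes = true,
    .systems = server_systems,
    .num_systems = sizeof(server_systems) / sizeof(server_systems[0]),
};

static const node_asset_sketch_t client_asset = {
    .name = "client",
    .accepts_connections = false,
    .sends_entity_changes = false,
    .systems = client_systems,
    .num_systems = sizeof(client_systems) / sizeof(client_systems[0]),
};
```

A peer-to-peer model would simply be a third preset in this picture: a node that both accepts connections and sends its changes.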

Virtual Network Simulation

The Network API abstracts away the internet completely, meaning that two nodes that run in the same The Machinery Exe instance (imagine you are testing a server-client game in the editor) will communicate in the exact same way (to the user’s eyes) as two nodes that are on two separate machines, far away from each other. This was very important to us as it means that to debug a multiplayer game, the users can just launch multiple network nodes from within the editor, and debug the game as if those instances were running on different machines, communicating over the Internet.

To do this, the open_pipe and send APIs are implemented so that if the node you want to talk to is local, then no messages are sent at all, but everything is done locally in the same process, and the receiver interfaces are called instantaneously, without making any copies of the data.
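The local shortcut can be sketched roughly as follows. This is an illustration under our own assumptions, not the engine's code: sketch_node_t, sketch_send, and the receiver callback signature are all hypothetical names.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

// Hypothetical receiver callback: gets a pointer to the payload and its size.
typedef void (*receiver_fn)(void *user_data, const void *data, size_t size);

// Hypothetical node handle. `is_local` is true when the node lives in the
// same process as the sender.
typedef struct sketch_node_t {
    bool is_local;
    receiver_fn on_receive;
    void *user_data;
    uint32_t packets_queued; // stand-in for "handed to the real transport"
} sketch_node_t;

// Returns true if the message was delivered in-process, false if it was
// queued for the (not shown) network transport.
static bool sketch_send(sketch_node_t *to, const void *data, size_t size)
{
    if (to->is_local) {
        // Same process: call the receiver right away with the sender's
        // buffer, so no copy is made and nothing touches the network.
        to->on_receive(to->user_data, data, size);
        return true;
    }
    // Remote node: a real implementation would serialize and transmit here.
    to->packets_queued++;
    return false;
}

// Example receiver that remembers the last pointer and size it saw.
typedef struct last_seen_t {
    const void *data;
    size_t size;
} last_seen_t;

static void remember(void *user_data, const void *data, size_t size)
{
    last_seen_t *seen = user_data;
    seen->data = data;
    seen->size = size;
}
```

The caller uses the same function for both kinds of node, which is what lets a game tested inside the editor run unchanged across machines.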

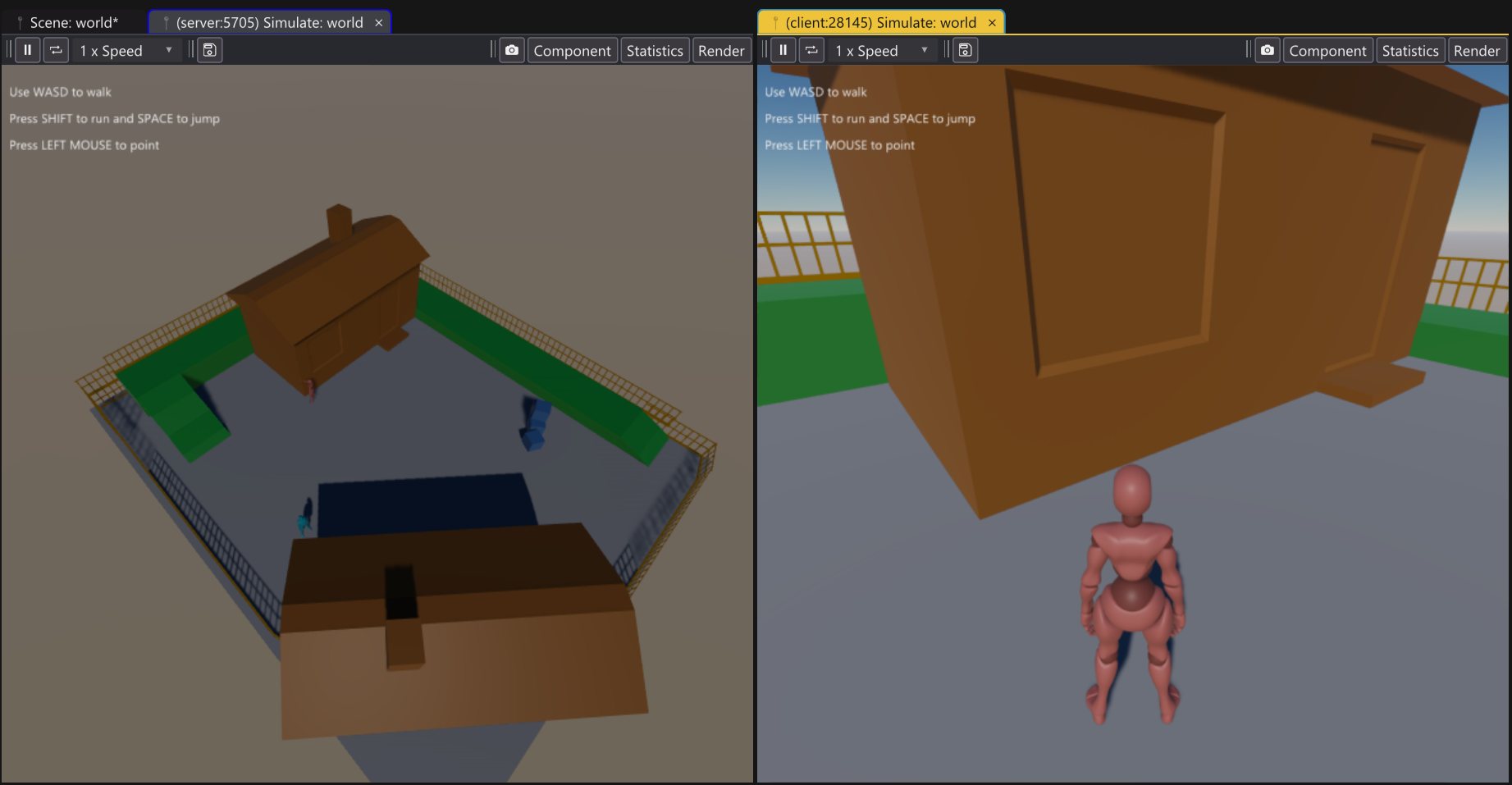

As a concrete example, you can simulate two different Network Nodes in two different Simulate tabs in The Machinery (a Server Node and a Client Node), and no code needs to be adjusted to make it work across the internet later on when you publish the game.

Two Network Nodes running in two different Simulate Tabs.

Component Synchronization

A simulation in The Machinery contains a number of Entities, each of which is constructed from Components. Multiplayer games are implemented by synchronizing the state of components over the network.

When registering a component, a tm_component_network_replication_i interface can be added to define if and how the component should be synchronized: if none is provided, the component won’t be synchronized at all.

As discussed in part 1, one of the goals of the multiplayer implementation was to provide an easy (possibly inefficient) “default” way to synchronize components across the network. It should be possible for the user to experiment with multiplayer quickly, and then later go in and do the necessary optimizations to make it run well over low-bandwidth connections.

In the tm_component_network_replication_i interface, you can achieve this by setting the watch_timer to a non-zero value x. If you do, the component will be checked for changes every x seconds, and if there are any changes, they will be replicated across the network.
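As a rough mental model of what a timed watch does, consider the sketch below. It is an assumption about the general technique (compare against a snapshot once the timer elapses), not a description of the engine's internals, and watched_blob_t and watch_tick are hypothetical names.

```c
#include <assert.h>
#include <stdbool.h>
#include <string.h>

// Hypothetical bookkeeping for one watched component instance.
typedef struct watched_blob_t {
    float watch_timer;          // seconds between checks; 0 disables the watch
    float elapsed;              // time accumulated since the last check
    unsigned char snapshot[64]; // copy of the component at the last check
    size_t bytes;               // component size, must be <= sizeof(snapshot)
} watched_blob_t;

// Advances the watch by dt seconds. Returns true when a check ran and the
// component differs from the snapshot, i.e. when it should be replicated.
static bool watch_tick(watched_blob_t *w, const void *component, float dt)
{
    if (w->watch_timer <= 0.0f)
        return false; // no timer configured: never checked
    w->elapsed += dt;
    if (w->elapsed < w->watch_timer)
        return false; // not time to check yet
    w->elapsed = 0.0f;
    if (memcmp(w->snapshot, component, w->bytes) == 0)
        return false; // checked, but nothing changed
    memcpy(w->snapshot, component, w->bytes); // remember the new state
    return true;
}
```

Note that a byte-wise comparison also looks at padding bytes, so a sketch like this only behaves well for tightly packed structs.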

Note: remember to flag the entities you want to be replicated in the Entity Tree. Entities that aren’t marked for replication won’t ever be replicated, even if their components have a network_replication_i interface.

    typedef struct replicated_component
    {
        float foo;
        float bar;
    } replicated_component;

    static tm_component_network_replication_i *replicated_component_network_replication
        = &(tm_component_network_replication_i){
              .watch_timer = 1.0f,
          };

    tm_component_i component = {
        .name = "replicated component",
        .bytes = sizeof(replicated_component),
        .network_replication = replicated_component_network_replication,
    };

    tm_entity_api->register_component(ctx, &component);

With this setup, in a client-server game, the server will check the replicated_component of the synchronized entities every second and, if some changes are detected, send those changes to the clients. Note that with this setup, the entire component will always be sent across the network, even if only one of the members was changed. This is because we haven’t told the network system anything about the struct layout, so all it can do is transmit it as a single binary blob. For more efficient transmission, you can provide a description of the layout and provide a per-member watch_timer.
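To see why a layout description helps, the sketch below compares how many payload bytes a change costs when the component is treated as a blob versus when each member is compared on its own. The member_desc_t table and both functions are hypothetical and do not reflect how The Machinery actually describes layouts. Per-member headers and identifiers are ignored.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

typedef struct example_component_t {
    float foo;
    float bar;
} example_component_t;

// Hypothetical layout description: one entry per member.
typedef struct member_desc_t {
    const char *name;
    size_t offset;
    size_t size;
} member_desc_t;

static const member_desc_t example_layout[] = {
    { "foo", offsetof(example_component_t, foo), sizeof(float) },
    { "bar", offsetof(example_component_t, bar), sizeof(float) },
};

// Blob mode: any difference means the whole component is sent.
static size_t bytes_to_send_blob(const void *old_state, const void *new_state, size_t size)
{
    return memcmp(old_state, new_state, size) == 0 ? 0 : size;
}

// Member mode: only the members that changed are sent.
static size_t bytes_to_send_members(const void *old_state, const void *new_state,
                                    const member_desc_t *layout, size_t num_members)
{
    size_t total = 0;
    for (size_t i = 0; i < num_members; ++i) {
        const unsigned char *a = (const unsigned char *)old_state + layout[i].offset;
        const unsigned char *b = (const unsigned char *)new_state + layout[i].offset;
        if (memcmp(a, b, layout[i].size) != 0)
            total += layout[i].size;
    }
    return total;
}
```

With two floats the saving is small, but for a component with many members where only one changes often, the difference adds up quickly on a low-bandwidth connection.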

Gamestate Synchronization

The Simulation API and the Entity API make use of the Gamestate system to synchronize the state of two separate entity contexts.

It does this by performing the following operations every frame:

- tm_entity_api->propagate_network_changes_to_gamestate() is called by the simulation. If automatic member/struct watches were configured on the components you asked to be replicated, they are run here so that the Gamestate is notified of those changes.
- tm_gamestate_api->dump_uncompressed_changes() is called. All the changes that happened last frame are extracted and dumped to a buffer (or multiple buffers).
- The changes are sent to all nodes that are “interested” in receiving updates of our entity context, using the tm_network_api->send() call.
- If a new connection is detected, we need to send it the complete Gamestate, not just the most recent changes: tm_gamestate_api->dump_all() is called in this case and once again the state is sent using the tm_network_api->send() call.
- The receivers will have a receiver interface attached (it is attached by default to the client network node asset) that calls tm_gamestate_api->load_uncompressed_changes() every time an update is received.
- The Gamestate notifies the Entity Context about changes that were loaded in, and the Entity Context will apply them: note that this is exactly the same thing that happens when you load a saved game from disk.
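The sender side of the steps above can be sketched as a small decision per peer. This is a simplified model with hypothetical types (peer_t, payload_kind_t, sync_frame). It does not call the real tm_gamestate_api or tm_network_api functions, whose signatures are not shown in this post, and it assumes a freshly connected peer receives the full state instead of that frame's delta.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

// What a peer was sent this frame, in this simplified model.
typedef enum payload_kind_t {
    PAYLOAD_NONE,
    PAYLOAD_CHANGES,    // stands in for the "dump changes" buffer
    PAYLOAD_FULL_STATE, // stands in for the "dump all" buffer
} payload_kind_t;

typedef struct peer_t {
    bool interested;              // wants updates about our entity context
    bool just_connected;          // connection was detected this frame
    payload_kind_t last_received; // what the stand-in "send" delivered
} peer_t;

// One frame of the sender side. `has_changes` stands in for "the changes
// dump produced a non-empty buffer".
static void sync_frame(peer_t *peers, size_t num_peers, bool has_changes)
{
    for (size_t i = 0; i < num_peers; ++i) {
        peer_t *p = &peers[i];
        p->last_received = PAYLOAD_NONE;
        if (!p->interested)
            continue;
        if (p->just_connected) {
            // New connection: the recent changes alone are not enough.
            p->last_received = PAYLOAD_FULL_STATE;
            p->just_connected = false;
        } else if (has_changes) {
            p->last_received = PAYLOAD_CHANGES;
        }
    }
}
```

On the receiving end, both payload kinds would go through the same load path, which is why applying a network update looks just like loading a saved game.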

Graph Event and Variable Synchronization

It is possible to replicate graph events and variables across the network as well: just use the corresponding *_variable_network_replication or trigger_event_network_replication graph nodes. In addition to setting the variable or triggering the event locally, these nodes will also inform other connected nodes about those updates.

When a graph event is replicated, we just send the event string hash together with the entity identifier: the receiver will call the event on the specified entity. All the graph event types marked with “Receive in order” will be received one after the other, in the order they were sent, while events not marked with “Receive in order” can potentially be received out of order.

Example 1: Both the events foo and bar are marked with “Receive in order”. If n1 sends the following sequence to n2: foo1, bar1, foo2, bar2 then n2 will execute the events in the exact same order.

Example 2: foo is marked with “Receive in order”, bar is not. If n1 sends the same sequence, foo1, bar1, foo2, bar2, it is guaranteed that foo2 is executed after foo1, but there’s no guarantee that bar2 will be executed after bar1, or even after the foo events. The events could arrive in orders such as:

- bar1, foo1, foo2, bar2
- bar1, bar2, foo1, foo2
- bar2, bar1, foo1, foo2
- bar2, foo1, foo2, bar1
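The rule behind both examples can be written down as a small check: an arrival order is acceptable as long as the events marked “Receive in order” keep the relative order in which they were sent. The sketch below is our own formulation of that rule, and sent_event_t and arrival_is_valid are hypothetical names.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

typedef struct sent_event_t {
    const char *label; // e.g. "foo1"
    bool in_order;     // event type is marked "Receive in order"
} sent_event_t;

// `sent` lists the events in sending order. `received[i]` is the index into
// `sent` of the i-th event that arrived (assumed to be a permutation of
// 0..n-1). Returns true when all in-order events arrived in sending order.
static bool arrival_is_valid(const sent_event_t *sent, const size_t *received, size_t n)
{
    bool seen_ordered = false;
    size_t last_ordered = 0;
    for (size_t i = 0; i < n; ++i) {
        const size_t idx = received[i];
        if (!sent[idx].in_order)
            continue; // unordered events may land anywhere
        if (seen_ordered && idx < last_ordered)
            return false; // an in-order event overtook an earlier one
        last_ordered = idx;
        seen_ordered = true;
    }
    return true;
}
```

For Example 2, the arrival bar2, foo1, foo2, bar1 passes this check, while foo2, bar1, foo1, bar2 does not, because foo2 would run before foo1.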

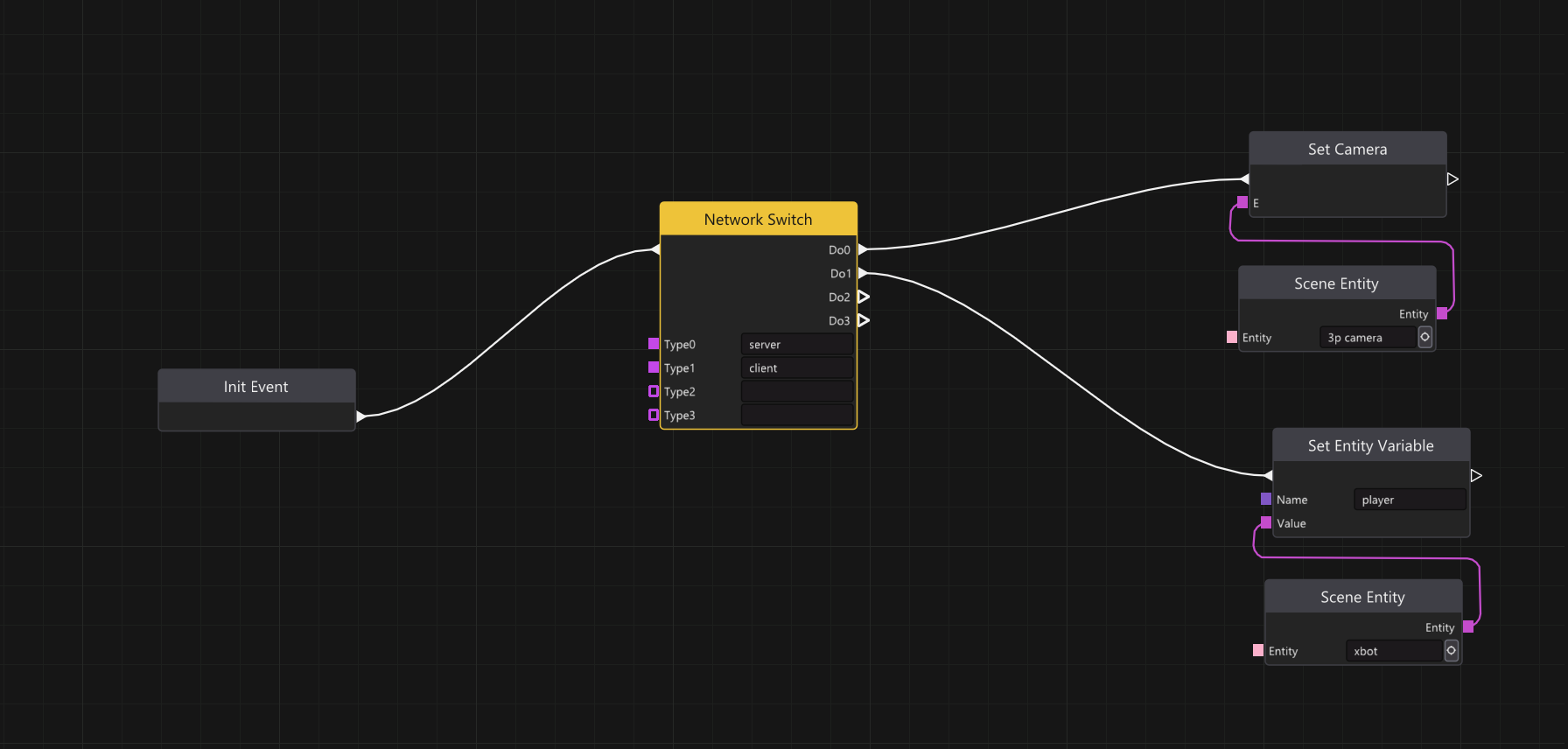

The Network Switch Graph Node

To make it as easy as possible to transition from single-player to multiplayer and vice-versa, the network switch graph node was introduced for use in Entity Graphs.

It works pretty much like a switch statement in C, but the variable that’s checked is the type of the network node of the simulation: for example, the graph might take a different path if the type of the node is server rather than client.

If a network node doesn’t have an associated name, it is assumed to be a “catch-all” simulation and ALL the execution paths will be executed in sequence: this should make it trivial to publish the single-player version of a multiplayer game.
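In C terms, the behavior described above is close to the following sketch. The types and the function are hypothetical and only model the selection logic, including the catch-all case. The real node lives in the visual graph editor.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

// One output path of the switch, labelled with the node type it is for.
typedef struct switch_case_t {
    const char *node_type; // e.g. "server" or "client"
} switch_case_t;

// Writes the indices of the paths to execute, in order, into `out` (which
// must have room for `num_cases` entries) and returns how many were written.
// `node_type` is NULL when the simulation's network node has no name.
static size_t network_switch(const switch_case_t *cases, size_t num_cases,
                             const char *node_type, size_t *out)
{
    size_t count = 0;
    for (size_t i = 0; i < num_cases; ++i) {
        // Catch-all: with no name, every path runs, one after the other.
        if (node_type == NULL || strcmp(cases[i].node_type, node_type) == 0)
            out[count++] = i;
    }
    return count;
}
```

A single-player build corresponds to the NULL case here: both the server path and the client path run in the same simulation.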

Server and Client taking different paths.

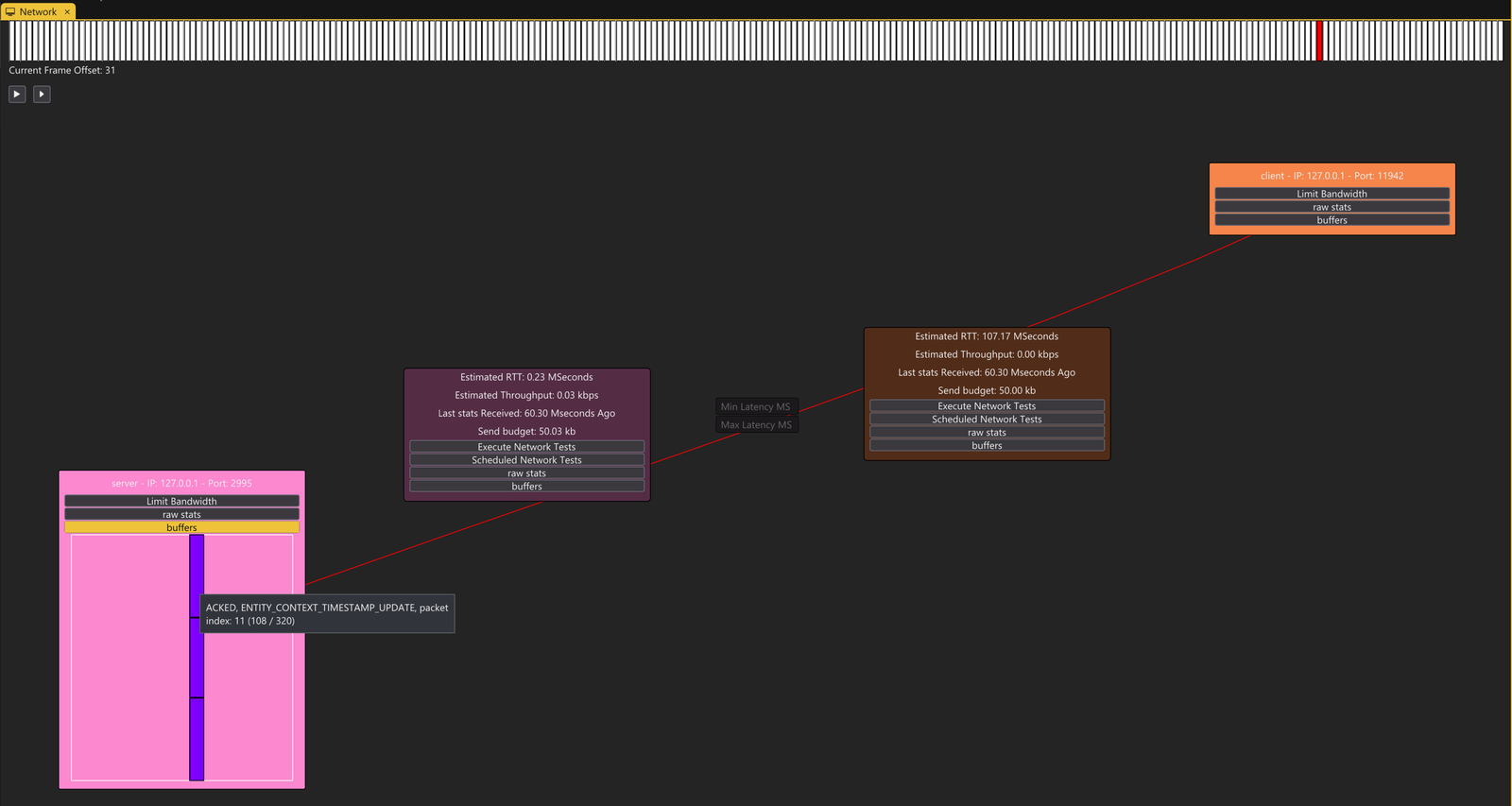

Network Profiler

The Network Profiler allows the user to see what nodes are currently “involved” in the simulation that is being run in the editor, and also to alter and monitor the conditions of the network at a specific point in time: you can artificially add latency to a Pipe or limit the upload/download bandwidth of a Network Node.

It is possible to pause the simulation by just pressing the pause button (all the running Simulation Instances will be paused at the same time). You can then inspect what packets are being sent/received by the network nodes. Packet statuses are shown so that questions like “how many packets of this specific type were acked in the last frame?” or “how many out-of-order packets are there?” can be answered.

You can access the network profiler by opening the Tab > Debugging > Network tab.

Inspecting packet buffers with the Network Profiler.

OK, you now know everything you need to know to start experimenting with multiplayer games in The Machinery… we wish you no network bugs for the rest of your life!

As always, we’d love to hear any feedback, suggestions, and critique from you, here in the comments or on Discord.

See you around,

Leonardo

Wait… why are you still reading? Go check out the new shiny multiplayer features of The Machinery!

Ok, ok… for those of you interested in the hardcore stuff, we’ll take a look at it next time around… stay tuned.