Scenes in The Machinery are built using an Entity-Component-System (ECS), where components are written as plugins. This means that we need some way to efficiently feed the renderer from the ECS. In today’s post I will walk you through how that works by doing a breakdown of the steps we go through on the CPU side to render a frame of The Machinery editor.

This post assumes knowledge of various concepts that I’ve covered in earlier blog posts, so if you haven’t read them I encourage you to do so before continuing:

“A Modern Rendering Architecture” — Our graphics API abstraction, responsible for generating an intermediate representation (IR) that each rendering backend later translates into actual graphics API calls.

“High-Level Rendering Using Render Graphs” — Our system for defining and scheduling what GPU work needs to be done for a specific view. I.e., when and how various buffers and render targets are populated with things like lighting, shadows, post processing, etc.

“The Machinery Shader System” (+ part 2 & 3) — Our high-level system for authoring and managing shaders.

“Simple Parallel Rendering” — Describes the benefits of completely decoupling GPU frame scheduling from the CPU rendering code.

Also worth noting before we begin: our goal is to build highly modular technology, so the ECS itself is also a plugin, which opens up the possibility of rolling your own system for modelling scenes if desired. Bear in mind, though, that the editor we ship with The Machinery is built around our entity component system, and many of the features we develop are exposed through it.

Feeding the renderer

What does it mean “to feed the renderer” from the ECS? From my point of view, it’s about coming up with a few simple, yet powerful and efficient, interfaces that make it easy for plugin authors to do things like:

Introducing new types of renderable objects and having them interact correctly with the view-frustum culling system and the Render Graph (which is responsible for defining how the final rendered image is assembled on the GPU). This is done by implementing various callbacks of an interface called tm_ci_render_i.

Introducing new types of auxiliary objects that feed data into the Render Graph and Shader System to make the final rendered image beautiful in various ways. A few examples: lights, decal projectors, reflection probes, post processing volumes/settings. These auxiliary objects may or may not have a location in space. If they do, they may or may not want to run through the view-frustum culling system. This is achieved by implementing various callbacks of an interface called tm_ci_shader_i.

Dynamically extending the running Render Graph with more passes exposed through modules defined in a plugin. This is also handled by implementing a callback in the tm_ci_shader_i interface.

Introducing new views to render the “scene” from and feeding the result into the Render Graph, e.g., rendering of shadow maps and reflection probes. This is done during the execution of the Render Graph by first extending it with a plugin-defined module using the tm_ci_shader_i interface. This works because the scheduling of the GPU work is completely decoupled from the scheduling of the CPU work, as described in my post about “Simple Parallel Rendering”.
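To make the split between the two interfaces a bit more concrete, here is a minimal C sketch of how plugin interfaces like these can be expressed as tables of optional function pointers, together with the engine-side loop that only calls a callback when a plugin actually implements it. Every name in it (sketch_ci_shader_i, sketch_ci_render_i, sketch_graph_module_t, the callback signatures and the named extension points) is hypothetical and only loosely modeled on the roles described above; it is not The Machinery’s actual API.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical Render Graph module exposing named extension points. */
#define SKETCH_MAX_INJECTED 8
#define SKETCH_MAX_POINTS 4

typedef struct sketch_extension_point_t {
    const char *name;                          /* e.g. "post_lighting" */
    const char *injected[SKETCH_MAX_INJECTED]; /* modules appended at this point */
    uint32_t num_injected;
} sketch_extension_point_t;

typedef struct sketch_graph_module_t {
    sketch_extension_point_t points[SKETCH_MAX_POINTS];
    uint32_t num_points;
} sketch_graph_module_t;

/* Appends `module` at the named extension point.
 * Returns 1 on success, 0 if the point is unknown or full. */
static int sketch_inject(sketch_graph_module_t *m, const char *point, const char *module)
{
    for (uint32_t i = 0; i < m->num_points; ++i) {
        sketch_extension_point_t *p = &m->points[i];
        if (strcmp(p->name, point) == 0 && p->num_injected < SKETCH_MAX_INJECTED) {
            p->injected[p->num_injected++] = module;
            return 1;
        }
    }
    return 0;
}

/* Hypothetical shader-side interface: every callback is optional (NULL = not implemented). */
typedef struct sketch_ci_shader_i {
    void (*graph_module_inject)(sketch_graph_module_t *main_module);
    void (*update)(const uint8_t *visibility_bits, uint32_t num_components);
} sketch_ci_shader_i;

/* Hypothetical render-side interface, also built from optional callbacks. */
typedef struct sketch_ci_render_i {
    void (*cull)(uint8_t *visibility_bits, uint32_t num_components, uint32_t num_viewers);
    void (*render)(const uint8_t *visibility_bits, uint32_t num_components, uint32_t num_viewers);
} sketch_ci_render_i;

/* Example plugin: a decal component that adds its own pass after lighting. */
static void decal_graph_module_inject(sketch_graph_module_t *main_module)
{
    sketch_inject(main_module, "post_lighting", "decal_projection");
}

/* Engine side: only plugins that implement graph_module_inject() get called. */
static void sketch_extend_main_module(const sketch_ci_shader_i *plugins, size_t num_plugins,
                                      sketch_graph_module_t *main_module)
{
    assert(main_module != NULL);
    for (size_t i = 0; i < num_plugins; ++i)
        if (plugins[i].graph_module_inject)
            plugins[i].graph_module_inject(main_module);
}
```

A plugin with nothing to add to the Render Graph simply leaves graph_module_inject as NULL. Plain structs of function pointers are a common way to expose interfaces from C plugins, since the engine can call them without knowing anything about the concrete component types behind them.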

On top of this, we need the code that calls these interfaces to run fast, like really fast. We are not talking about hundreds or thousands of these user-defined components; we want to be able to handle hundreds of thousands of them each frame. Or at least we aim to, provided the plugin code is well-written and designed for it.

To achieve that, we have to be cache friendly and make it easy to go as wide as possible across all available worker cores. At the same time, we want our interfaces to be simple to use without enforcing overly strict rules on the plugin author. A tricky balance…

Frame breakdown

To explain how we achieve this using the tm_ci_shader_i and tm_ci_render_i interfaces mentioned above, let’s do a high-level breakdown of rendering a frame in The Machinery editor.

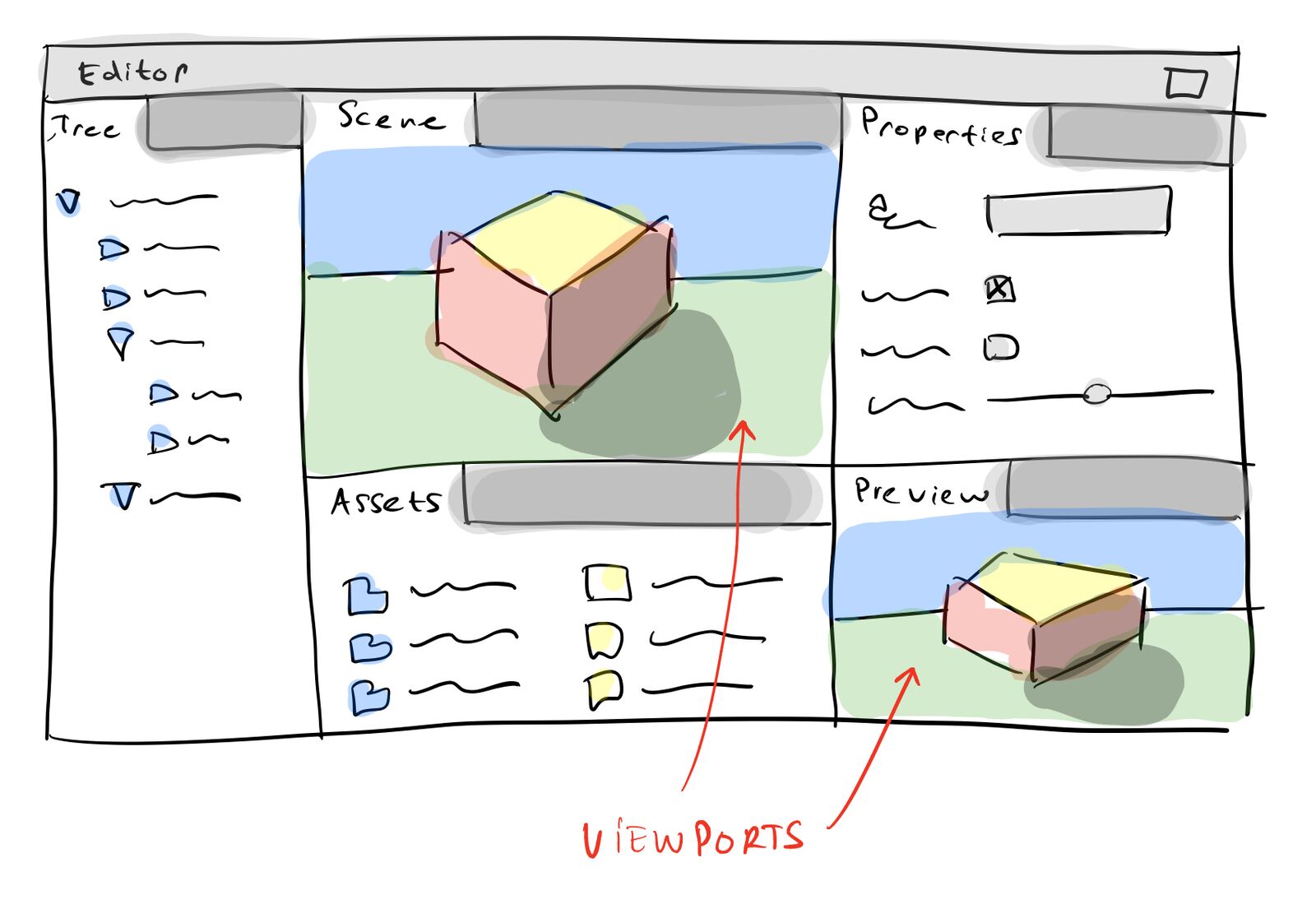

A typical editor frame.

The rendering of viewports happens in parallel. We start by gathering all visible editor tabs that have some form of embedded viewport; then, for each viewport, we launch a job responsible for rendering its contents. The inputs to a viewport rendering job are a Render Graph Module, the output render target, and some camera parameters.
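The gathering step can be sketched as a filter over the editor tabs that produces one independent job input per visible viewport. The tab and job structs, and the integer handles standing in for the Render Graph Module and the render target, are hypothetical; this sketch only collects the job inputs and leaves handing each one to a worker thread to whatever job system is available.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical camera parameters handed to a viewport job. */
typedef struct sketch_camera_t {
    float view[16];
    float projection[16];
} sketch_camera_t;

/* Hypothetical editor tab. */
typedef struct sketch_tab_t {
    int visible;               /* is the tab currently shown? */
    int has_viewport;          /* does it embed some form of viewport? */
    uint32_t graph_module_id;  /* Render Graph Module driving the viewport */
    uint32_t render_target_id; /* output render target */
    sketch_camera_t camera;
} sketch_tab_t;

/* Input to one viewport rendering job. */
typedef struct sketch_viewport_job_t {
    uint32_t graph_module_id;
    uint32_t render_target_id;
    const sketch_camera_t *camera;
} sketch_viewport_job_t;

/* Collects one job per visible tab with an embedded viewport, stopping once
 * `max_jobs` entries have been written. Returns the job count. */
static size_t sketch_gather_viewport_jobs(const sketch_tab_t *tabs, size_t num_tabs,
                                          sketch_viewport_job_t *jobs, size_t max_jobs)
{
    assert(tabs != NULL || num_tabs == 0);
    size_t n = 0;
    for (size_t i = 0; i < num_tabs && n < max_jobs; ++i) {
        if (!tabs[i].visible || !tabs[i].has_viewport)
            continue;
        jobs[n++] = (sketch_viewport_job_t){
            .graph_module_id = tabs[i].graph_module_id,
            .render_target_id = tabs[i].render_target_id,
            .camera = &tabs[i].camera,
        };
    }
    return n;
}
```

Each job only reads its own tab’s data, so the jobs share no mutable state and can be kicked in parallel.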

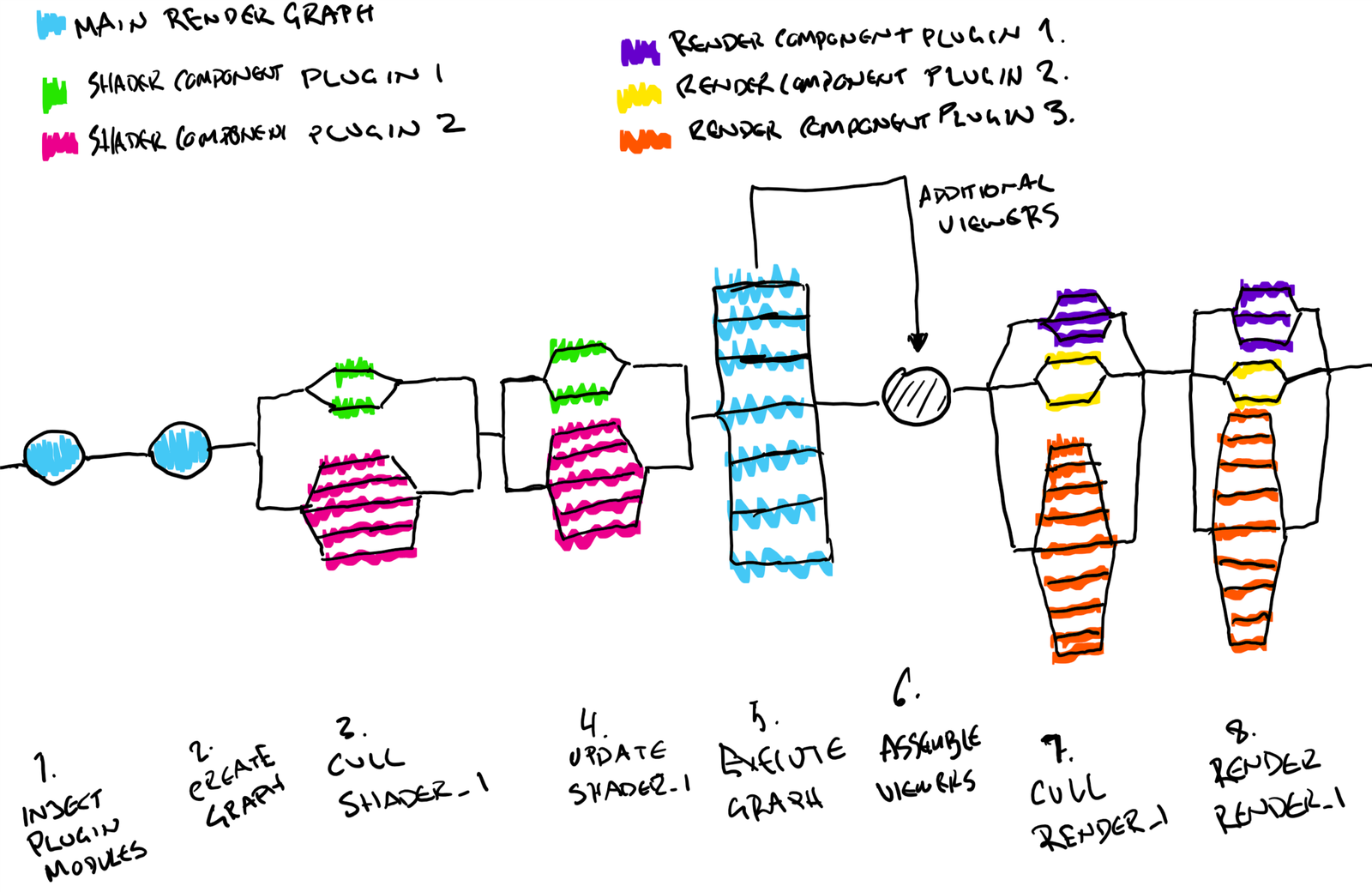

Then for each rendering job we do the following:

1. Extend the Render Graph Module associated with the viewport (main module) for any component plugin that implements the tm_ci_shader_i::graph_module_inject() callback. This allows the plugin to append any number of additional modules to execute as part of the main module by injecting them at one of the extension points defined in the main module.

2. Create the Render Graph instance from the main module.

3. Run view-frustum culling from the camera associated with the viewport (main camera) for any component plugins interested in introducing new cullable auxiliary objects (lights, reflection probes and similar).

4. Execute the tm_ci_shader_i::update() function to let each component plugin generate whatever auxiliary data it wants and expose the result to the Render Graph and/or globally through a tm_shader_system_o (as described in this post). If the component plugin requested to be view-frustum culled, each component will get its visibility result in a bit stream passed to the update function.

5. Build and execute the Render Graph. At this point all input/output data to the Render Graph is known, so we can figure out exactly which passes of the graph really need to be executed, how to schedule their work on the graphics and compute queues (on one or potentially more GPUs), and what synchronization points and resource barriers we need. During the execution of the Render Graph, more viewers might get generated for rendering things like shadow maps, reflections and similar. These viewers are registered with the Render Graph instance.

6. Assemble the final array of scene viewers: the main camera plus any additional viewers that were generated during the Render Graph execution. Each viewer has knowledge of:

   - A 64-bit sort key specifying when during the GPU frame the renderable objects seen from the viewer should be rendered.
   - View-dependent data that the shader assigned to the renderable object needs to render correctly. Typically this is just the camera settings exposed through a tm_shader_system_o, but it can include any other resources as well.
   - A 64-bit visibility mask categorizing the viewer, used for handling user-defined visibility settings.
   - Camera settings for view-frustum culling.

7. For each component plugin implementing the culling callbacks of a tm_ci_render_i, we now run view-frustum culling. The culling runs for all cameras associated with the viewers assembled in step 6 in one go.

8. For each component plugin implementing the tm_ci_render_i::render() callback, we now have everything we need to render all renderable objects defined by its components, from all viewers, in a single pass over the component data. Similar to how tm_ci_shader_i::update() worked, if the component plugin requested to be view-frustum culled (in step 7), each component will get its visibility result in a bit stream passed to the render function. But since we might now have more than one viewer, the visibility bit stream is interleaved, holding one visibility bit per viewer.
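Steps 6 to 8 can be illustrated with a small sketch of a viewer record and an interleaved visibility bit stream. It assumes component-major interleaving, where bit component * num_viewers + viewer holds the culling result for that pair, and a hypothetical per-component 64-bit mask that is ANDed with the viewer’s visibility mask. The post does not specify the exact bit layout or how the mask is applied, so treat both as assumptions, along with all the sketch_* names.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical viewer record holding the four pieces of knowledge listed in step 6. */
typedef struct sketch_viewer_t {
    uint64_t sort_key;          /* when in the GPU frame this viewer's objects are rendered */
    const void *view_data;      /* view-dependent shader data, e.g. camera constants */
    uint64_t visibility_mask;   /* categorizes the viewer for user-defined visibility */
    float frustum_planes[6][4]; /* camera settings used for view-frustum culling */
} sketch_viewer_t;

/* Assumed component-major interleaving: bit (component * num_viewers + viewer). */
static uint64_t sketch_vis_bit_index(uint32_t component, uint32_t viewer, uint32_t num_viewers)
{
    assert(viewer < num_viewers);
    return (uint64_t)component * num_viewers + viewer;
}

/* Marks `component` as visible from `viewer` (what a culling pass would write). */
static void sketch_vis_set(uint8_t *bits, uint32_t component, uint32_t viewer, uint32_t num_viewers)
{
    uint64_t i = sketch_vis_bit_index(component, viewer, num_viewers);
    bits[i >> 3] |= (uint8_t)(1u << (i & 7));
}

/* Returns 1 if `component` survived culling for `viewer`, else 0. */
static int sketch_vis_test(const uint8_t *bits, uint32_t component, uint32_t viewer, uint32_t num_viewers)
{
    uint64_t i = sketch_vis_bit_index(component, viewer, num_viewers);
    return (bits[i >> 3] >> (i & 7)) & 1;
}

/* Hypothetical render() body: one pass over the components, emitting a draw for every
 * (component, viewer) pair that survived culling and whose (assumed) per-component mask
 * overlaps the viewer's visibility mask. Returns the number of draws emitted. */
static uint32_t sketch_render(const uint8_t *bits, const uint64_t *component_masks,
                              uint32_t num_components, const sketch_viewer_t *viewers,
                              uint32_t num_viewers)
{
    uint32_t draws = 0;
    for (uint32_t c = 0; c < num_components; ++c) {
        for (uint32_t v = 0; v < num_viewers; ++v) {
            if (sketch_vis_test(bits, c, v, num_viewers) &&
                (component_masks[c] & viewers[v].visibility_mask))
                ++draws; /* a real plugin would record a draw call into a command buffer here */
        }
    }
    return draws;
}
```

Keeping each component’s per-viewer bits next to each other fits the single pass over the component data in step 8: the inner loop over viewers reads adjacent bits.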

The final output from the viewport rendering job is a number of resource command buffers and command buffers that later get submitted to the rendering backend, which is responsible for translating the commands into graphics API calls.
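The per-viewer 64-bit sort key is what allows command buffers recorded by independent jobs to be put back into GPU-frame order. Below is a minimal sketch assuming that commands carry a 64-bit key and are ordered by sorting on it before translation, with a hypothetical key layout that puts the viewer’s slot in the top 16 bits. The actual command and key formats used by The Machinery are not described in this post.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <stdlib.h>

/* Hypothetical command record: a 64-bit sort key plus a stand-in payload. */
typedef struct sketch_command_t {
    uint64_t sort_key;
    uint32_t payload; /* would be the actual draw/dispatch data */
} sketch_command_t;

/* Hypothetical key layout: the viewer's slot in the top 16 bits (e.g. shadow
 * views ahead of the main view), the order within that viewer in the low 48 bits. */
static uint64_t sketch_make_key(uint16_t viewer_slot, uint64_t within_viewer)
{
    return ((uint64_t)viewer_slot << 48) | (within_viewer & 0xFFFFFFFFFFFFull);
}

static int sketch_compare_commands(const void *a, const void *b)
{
    uint64_t ka = ((const sketch_command_t *)a)->sort_key;
    uint64_t kb = ((const sketch_command_t *)b)->sort_key;
    return (ka > kb) - (ka < kb); /* avoids truncating a 64-bit difference to int */
}

/* Puts commands recorded by independent jobs into sort-key order. qsort is not
 * stable, so commands with identical keys may come out in any order. */
static void sketch_sort_commands(sketch_command_t *cmds, size_t count)
{
    assert(cmds != NULL || count == 0);
    if (count > 1)
        qsort(cmds, count, sizeof cmds[0], sketch_compare_commands);
}
```

Because qsort is not stable, commands that must keep their relative order need distinct keys under this scheme.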

It’s worth noting that all of the above steps except 1, 2, and 6 run in parallel for each component plugin (and, depending on workload, we further split the data and go wide when processing it):

Data flow through the renderer.
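Going wide within a single component plugin mostly comes down to splitting its component array into contiguous ranges that workers can process independently. Here is a minimal sketch with hypothetical names; the per-range work just counts set bits in a (non-interleaved) visibility bit stream, as a stand-in for a plugin’s update or render work. If the requested chunk size would produce more ranges than there are slots, the chunks are enlarged rather than dropping components.

```c
#include <assert.h>
#include <stdint.h>

/* A contiguous slice of one plugin's component array. */
typedef struct sketch_range_t {
    uint32_t first;
    uint32_t count;
} sketch_range_t;

/* Splits `num_components` into contiguous ranges of at most `chunk_size` items.
 * If that would need more than `max_ranges` ranges, the chunks are enlarged instead
 * of dropping work. Returns the number of ranges written to `out`. */
static uint32_t sketch_split(uint32_t num_components, uint32_t chunk_size,
                             sketch_range_t *out, uint32_t max_ranges)
{
    assert(chunk_size > 0 && max_ranges > 0);
    uint32_t needed = num_components / chunk_size + (num_components % chunk_size != 0);
    if (needed > max_ranges)
        chunk_size = num_components / max_ranges + (num_components % max_ranges != 0);

    uint32_t n = 0;
    for (uint64_t first = 0; first < num_components; first += chunk_size) {
        uint64_t remaining = num_components - first;
        out[n].first = (uint32_t)first;
        out[n].count = (uint32_t)(remaining < chunk_size ? remaining : chunk_size);
        ++n;
    }
    return n;
}

/* Example per-range work: count components whose visibility bit is set. Ranges are
 * disjoint, so each one can run on its own worker without locking. */
static uint32_t sketch_count_visible(const uint8_t *bits, sketch_range_t r)
{
    uint32_t visible = 0;
    for (uint32_t i = r.first; i < r.first + r.count; ++i)
        visible += (bits[i >> 3] >> (i & 7)) & 1u;
    return visible;
}
```

Contiguous ranges let each worker stream through its own part of the component data, which keeps the access pattern cache friendly; the per-range results only need to be combined at the end.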

Once all viewport rendering jobs are done, we move on to render the editor UI for each window and finally present the swap chain.

That’s it. So far, I’m really happy with this architecture, as it makes it easy to efficiently implement rendering algorithms that traditionally can be hard to express in a self-contained way inside a plugin, without having to touch “core” rendering systems. My personal pet peeve is algorithms that need to render the scene into additional views (like shadows, reflections, voxelization, etc.), something that has been rather complicated to implement efficiently in previous renderers I’ve worked on but has now become super trivial.

I also like that, on an engine level, this architecture has very few predefined behaviors and objects. There’s no awareness of things like meshes, light sources, particle systems, terrains, whatnot. All those concepts are built on top of the tm_ci_shader_i and tm_ci_render_i interfaces, and their code can be completely self-contained and isolated inside plugins.

I’m aware that I have skimmed over a bunch of implementation details with respect to the view-frustum culling, visibility management, and so on. I did so both to make this post a bit easier to digest and because a lot of this code is still in flux. In a later post I might revisit some of these systems in more detail.

Stay tuned…