Implementing the Render Graph system that I talked about in my last post got me thinking more about how best to feed the not-so-new-anymore graphics APIs (i.e. Vulkan and DX12) so that they run as efficiently as possible. So in this post and the next I will try to give some general advice on what I think is important to consider when designing a cross-platform renderer that runs on top of these APIs.

I’ve already covered the graphics API abstraction layer in the post “A Modern Rendering Architecture”, so if you haven’t read it already it’s probably a good idea to do so now as that provides an overview of the low-level design of the renderer in The Machinery.

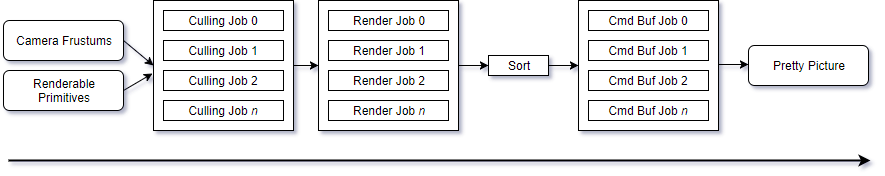

In today’s post we will look at an approach for achieving data-parallelism, in a clean and simple way, when rendering large worlds generating lots of draw calls.

Parallel rendering

Rendering in general is rather straightforward to parallelize as we typically have a clean one-way data flow. If we ignore propagation of any external state changes (such as time advancement, user input, etc.) we eventually end up with a bunch of camera frustums we want to render views from, paired with one or many sets of renderable primitives (such as meshes, particle systems, etc.) that should be rendered into these views if they somehow contribute to the final image.

One-way data flow.

Unless some higher level system needs feedback from the rendering there’s nothing going in the opposite direction. And even if there is, the amount of data travelling upstream is typically very limited in comparison, making it less important to design for massive throughput in that direction.

The key to making it simple to extract data parallelism from a rendering flow like this is to limit and plan for any kind of state mutation of the objects ahead of time. This might not look like a problem if you think about the frame flow in a very serial manner, e.g.:

- Cull light sources against main camera frustum.

- For each shadow casting light source:

  - Cull shadow casters against light camera frustum.

  - Render visible shadow casting renderables.

- Cull renderables against main camera frustum.

- Render visible renderables.

- ...and so on until everything has been rendered.

However there are at least two problematic scenarios to consider with this approach:

- What if you want to skip evaluating some computation or updating some resource based on the culling result, i.e. on whether the renderable is visible or not? Example: calculation of skinning matrices.

- What if you have a resource that wants different state depending on camera location? Example: LOD of a particle system.

In both these scenarios the problem is that the state of the objects needs to mutate based on the result of the culling and/or the location of the cameras. And this state mutation happens while any number of jobs might be visiting the same object. If you follow something similar to the naive serial breakdown described above and don’t plan for this, the code easily becomes complex and inefficient due to the various state tracking mechanisms that need to be put in place in both the renderer and the render backends to guarantee object consistency.

To better explain this let’s take a closer look at the second scenario from the example above. Let’s consider a particle system that wants different geometry representations based on camera position due to some kind of Level-Of-Detail mechanism. Now, let’s assume that this particle system will be rendered using a material that writes into two different layers when viewed from the main camera, say the “g-buffer” layer and the “emissive” layer. On top of that it also casts shadows, and the shadows happen to be configured to render in between the g-buffer and emissive layers. In this case you end up with something like this in the back-end:

- Render from main camera into g-buffer render targets, geometry state 0

- Render from shadow camera 0 into shadow map, geometry state 1

- Render from shadow camera 1 into shadow map, geometry state 2 (and so on for each shadow camera)

- Render from main camera into emissive render target, geometry state 0

Implementing state management for something like this easily becomes messy, especially when everything is running in parallel.

A cleaner approach

Fortunately there’s a straightforward and simple solution to this, at least if you are writing a renderer from scratch. Retrofitting this approach to an existing large code base would probably be a bigger challenge.

The core idea is to simply stop visiting the same renderable object more than once per frame. If we can guarantee that no one else is visiting the same object at the same time we can also safely mutate its state. To achieve this you’ll need a mechanism that allows you to completely decouple GPU scheduling of draw calls / state changes from CPU scheduling. We do this using a 64-bit sort key that we pair with every render command. I’ve covered this before [2], so I won’t go into any detail about how it’s done. However, it’s important to understand that having something like this in place is crucial; otherwise you are stuck in a kind of semi-immediate mode that makes implementing this approach much more complicated than it has to be.
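To make the role of the sort key a bit more concrete, here is a minimal sketch of the general idea. The bit layout, the layer names and the `render_command_t` struct are all assumptions made up for this example; they are not the layout used in The Machinery. The point is only that commands can be recorded in any order on the CPU and still end up in the intended order for the GPU after a sort.

```c
#include <assert.h>
#include <stdint.h>
#include <stdlib.h>

// Hypothetical layers, matching the particle system example earlier in the post.
enum { LAYER_GBUFFER = 0, LAYER_SHADOWS = 1, LAYER_EMISSIVE = 2 };

// Hypothetical key layout (an assumption for illustration only):
// bits 63..56 = layer, bits 55..48 = view, bits 47..0 = ordering within the view.
static uint64_t make_sort_key(uint8_t layer, uint8_t view, uint64_t order)
{
    assert(order < (1ULL << 48));
    return ((uint64_t)layer << 56) | ((uint64_t)view << 48) | order;
}

typedef struct render_command_t {
    uint64_t sort_key;
    uint32_t payload; // stand-in for the actual draw call / state change data
} render_command_t;

static int compare_commands(const void *a, const void *b)
{
    const uint64_t ka = ((const render_command_t *)a)->sort_key;
    const uint64_t kb = ((const render_command_t *)b)->sort_key;
    return (ka > kb) - (ka < kb);
}

// Sorts commands into submission order, independent of the order
// in which they were recorded on the CPU.
static void sort_commands(render_command_t *commands, size_t count)
{
    qsort(commands, count, sizeof(*commands), compare_commands);
}
```

With a layout like this, a single visit to the particle system can record its g-buffer, shadow and emissive draws back to back, and the sort places the shadow draws between the other two.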

The way to structure your code is to have each system in need of rendering various objects (e.g. systems for regular object rendering, shadow mapping, cube map generation, etc.) expose an interface for retrieving which cameras it wants to render from, which set of renderable objects each camera is interested in, and optional sort keys for each camera.
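An interface along these lines could look roughly like the sketch below. Every name here (`view_request_t`, `render_system_i`, `gather_views`, the toy shadow system) is hypothetical and only meant to show the shape of the data being exchanged, not the actual interface in The Machinery.

```c
#include <assert.h>
#include <stdint.h>

// All types and names below are hypothetical, for illustration only.
typedef struct camera_t {
    float view_projection[16];
} camera_t;

typedef struct object_set_t object_set_t; // opaque set of renderable objects

typedef struct view_request_t {
    camera_t camera;
    const object_set_t *objects; // the objects this camera is interested in
    uint64_t sort_key;           // optional, 0 if unused
} view_request_t;

typedef struct render_system_i {
    void *inst;
    // Writes at most `capacity` requests to `out`, returns the number written.
    uint32_t (*gather_views)(void *inst, view_request_t *out, uint32_t capacity);
} render_system_i;

// Collects the view requests of all systems into one array.
static uint32_t gather_all_views(const render_system_i *systems, uint32_t num_systems,
                                 view_request_t *out, uint32_t capacity)
{
    uint32_t n = 0;
    for (uint32_t i = 0; i < num_systems && n < capacity; ++i) {
        n += systems[i].gather_views(systems[i].inst, out + n, capacity - n);
        assert(n <= capacity);
    }
    return n;
}

// Toy example system: requests one view per shadow casting light.
typedef struct shadow_system_t {
    uint32_t num_lights;
    const object_set_t *shadow_casters;
} shadow_system_t;

static uint32_t shadow_gather_views(void *inst, view_request_t *out, uint32_t capacity)
{
    const shadow_system_t *s = inst;
    const uint32_t n = s->num_lights < capacity ? s->num_lights : capacity;
    for (uint32_t i = 0; i < n; ++i) {
        // Camera left zero-initialized here; a real system would fill in the light's frustum.
        out[i] = (view_request_t){ .objects = s->shadow_casters, .sort_key = (uint64_t)i };
    }
    return n;
}
```

The renderer only sees a flat array of view requests, so adding a new system (say cube map generation) does not change the culling or job scheduling code.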

When all the viewing cameras have been gathered we associate each camera with a bit in an arbitrarily long bit mask controlled by the culling system. We then run the culling system for all object sets against all interested camera frustums. Exactly how this is implemented doesn’t really matter as long as you end up with an output from the culling system where each object holds the visibility result per camera represented as a bit in a bit mask.
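As an illustration of what that output could look like, here is a deliberately simple brute-force sketch. It assumes bounding spheres, frustums stored as six inward-facing planes, and at most 64 cameras so that the mask fits in a single `uint64_t`; all of those are simplifications chosen for the example, and the type names are made up.

```c
#include <assert.h>
#include <stdint.h>

// Hypothetical types. A point p is inside a plane when nx*x + ny*y + nz*z + d >= 0.
typedef struct plane_t { float nx, ny, nz, d; } plane_t;
typedef struct frustum_t { plane_t planes[6]; } frustum_t;
typedef struct sphere_t { float x, y, z, radius; } sphere_t;

static int sphere_in_frustum(const frustum_t *f, const sphere_t *s)
{
    for (int i = 0; i < 6; ++i) {
        const plane_t *p = &f->planes[i];
        const float dist = p->nx * s->x + p->ny * s->y + p->nz * s->z + p->d;
        if (dist < -s->radius)
            return 0; // completely outside one plane, so not visible
    }
    return 1; // conservative: intersecting or inside
}

// After the call, bit c of visibility[o] is set if object o is visible from camera c.
static void cull(const frustum_t *cameras, uint32_t num_cameras,
                 const sphere_t *bounds, uint32_t num_objects, uint64_t *visibility)
{
    assert(num_cameras <= 64); // simplification: one 64-bit word per object
    for (uint32_t o = 0; o < num_objects; ++o) {
        uint64_t mask = 0;
        for (uint32_t c = 0; c < num_cameras; ++c) {
            if (sphere_in_frustum(&cameras[c], &bounds[o]))
                mask |= 1ULL << c;
        }
        visibility[o] = mask;
    }
}
```

A real culling system would use some spatial acceleration structure and run in parallel, but the per-object bit mask it produces can look the same.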

We then filter the output from the culling system to eliminate all objects that don’t intersect any camera frustum. What we end up with is a unique list of renderable objects that intersect one or many of the cameras. By looking at the number of intersecting cameras and having an understanding of the computational complexity of the various types of renderable objects we can derive some kind of load balancing metric for how to split the list of renderable objects into well balanced jobs (i.e. we are striving for our jobs to take approximately the same time to execute).
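The filtering and splitting can be sketched as follows. The cost metric used here (number of intersecting cameras multiplied by a per-object cost estimate) and the greedy split into contiguous ranges are assumptions picked to keep the example short; any metric that gives reasonably even jobs would do.

```c
#include <assert.h>
#include <stdint.h>

static uint32_t count_bits(uint64_t v)
{
    uint32_t n = 0;
    for (; v; v &= v - 1) // clears the lowest set bit each iteration
        ++n;
    return n;
}

// Writes the indices of all objects seen by at least one camera to `visible`.
static uint32_t filter_visible(const uint64_t *visibility, uint32_t num_objects, uint32_t *visible)
{
    uint32_t n = 0;
    for (uint32_t o = 0; o < num_objects; ++o) {
        if (visibility[o])
            visible[n++] = o;
    }
    return n;
}

// Hypothetical metric: cameras seeing the object times an estimated per-view cost.
static uint64_t job_cost(uint32_t o, const uint64_t *visibility, const uint32_t *object_cost)
{
    return (uint64_t)count_bits(visibility[o]) * object_cost[o];
}

// Greedily splits visible[0..n) into at most max_jobs contiguous ranges of
// roughly equal cost. job_end[j] is the exclusive end index of job j.
static uint32_t split_jobs(const uint32_t *visible, uint32_t n, const uint64_t *visibility,
                           const uint32_t *object_cost, uint32_t max_jobs, uint32_t *job_end)
{
    assert(max_jobs > 0);
    uint64_t total = 0;
    for (uint32_t i = 0; i < n; ++i)
        total += job_cost(visible[i], visibility, object_cost);
    const uint64_t target = (total + max_jobs - 1) / max_jobs;

    uint32_t jobs = 0;
    uint64_t acc = 0;
    for (uint32_t i = 0; i < n; ++i) {
        acc += job_cost(visible[i], visibility, object_cost);
        // Close the job once it reaches the target, but keep the last slot for the remainder.
        if (acc >= target && jobs + 1 < max_jobs && i + 1 < n) {
            job_end[jobs++] = i + 1;
            acc = 0;
        }
    }
    if (n > 0)
        job_end[jobs++] = n;
    return jobs;
}
```

Job `j` then processes `visible[job_end[j - 1] .. job_end[j])`, with the first job starting at index 0.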

Within the jobs we then call the render function on each object and pipe in an array of cameras with optional sort keys as parameters. As we can now be certain that no other job is touching the state of the object, we can safely mutate its state and update any resources (skin matrices, geometry, whatnot) that might be in need of updating.
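A stripped-down sketch of what such a job could look like is shown below. The types, the `skin_updates` counter standing in for an expensive shared update, and the distance-based LOD rule are all invented for the example. What it tries to show is that shared state is updated once per object, while anything view-dependent travels with the emitted command instead of living in the object.

```c
#include <assert.h>
#include <stdint.h>

// Hypothetical types, for illustration only.
typedef struct view_t {
    float camera_distance; // stand-in for real camera data
    uint64_t sort_key;
} view_t;

typedef struct renderable_t {
    uint32_t skin_updates; // counts how often the expensive shared update ran
} renderable_t;

typedef struct draw_t {
    uint64_t sort_key;
    uint32_t lod;
} draw_t;

// Called once per visible object per frame, with every view that sees it.
static uint32_t render_object(renderable_t *obj, const view_t *views, uint32_t num_views, draw_t *out)
{
    // Safe to mutate: no other job visits this object during the frame.
    obj->skin_updates++; // e.g. recompute skinning matrices once for all views

    for (uint32_t v = 0; v < num_views; ++v) {
        // View-dependent choices go into the command, not into the object.
        out[v].sort_key = views[v].sort_key;
        out[v].lod = views[v].camera_distance < 10.0f ? 0 : 1;
    }
    return num_views;
}

// One job: renders the objects in visible[begin..end) and returns the number of draws emitted.
static uint32_t run_job(renderable_t *objects, const uint32_t *visible, uint32_t begin, uint32_t end,
                        const uint64_t *visibility, const view_t *all_views, uint32_t num_views,
                        draw_t *out)
{
    assert(num_views <= 64);
    uint32_t n = 0;
    for (uint32_t i = begin; i < end; ++i) {
        const uint32_t o = visible[i];
        view_t views[64];
        uint32_t count = 0;
        // Gather only the views whose bit is set in the object's visibility mask.
        for (uint32_t c = 0; c < num_views; ++c) {
            if (visibility[o] & (1ULL << c))
                views[count++] = all_views[c];
        }
        n += render_object(&objects[o], views, count, out + n);
    }
    return n;
}
```

Since each job writes to its own output range and touches a disjoint set of objects, no locking is needed in this sketch.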

By restructuring our code like this we not only solve the state tracking issues when updating resources, we also make sure never to visit a renderable object more than once per frame. This makes the approach more cache friendly than a more traditional serial approach, where you typically end up visiting the same object multiple times during a frame. At the same time, we have made it easier for people to plug new types of renderable objects into the platform, as they no longer have to guarantee that their render callbacks do not mutate state.

Next time

Next time we will look at some things related to various render state changes that we do in our tm_renderer_command_buffer_i interface to make it map nicely to Vulkan and DX12.